Buffer Engineering Report

September 2016

Key stats

Requests for buffer.com: 196 million

Avg. response for buffer.com: 226 ms

Requests for api.bufferapp.com: 1.04 billion

Avg. response for api.bufferapp.com: 86.8 ms

Bugs & Quality

- Code reviews given: 68% of pull requests were reviewed

- 4 S1 (severity 1) bugs: 4 opened, 3 closed (42% smashed)

- 24 S2 (severity 2) bugs: 9 opened, 13 closed (59% smashed)

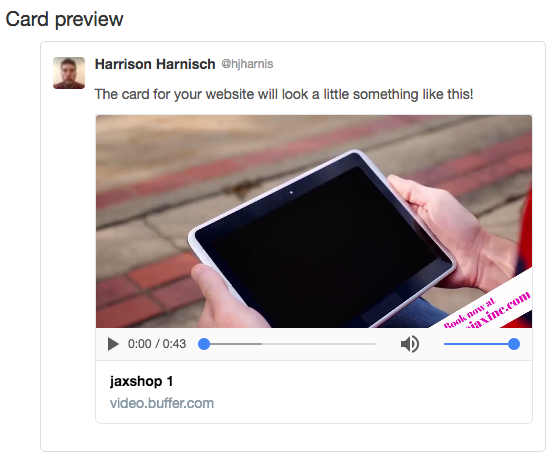

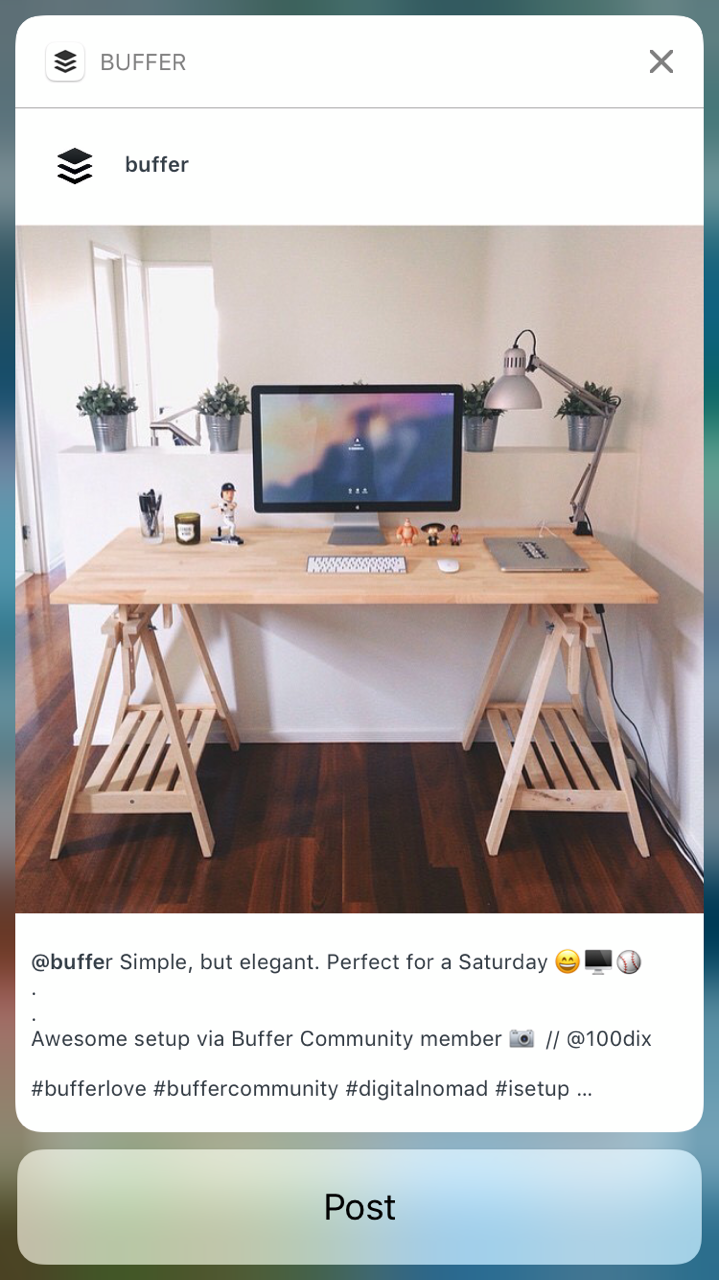

Buffer video sharing is more foolproof now

If you haven’t used Buffer to schedule videos before: you can upload a video and share it, perfectly formatted, to all the social media networks Buffer supports. Here’s what it looks like for Twitter:

This month, Harrison gave Buffer’s video sharing a total overhaul and built it out into its own separate service.

Buffer videos are now set up as a microservice independent of the web app. This is what one of Brian’s latest videos looks like on the service!

Previously, the video service lived alongside the main app, so deployments and scaling were coupled between the Buffer web app and the video service. They also shared the same database (MongoDB), so a problem with the video service could impact the Buffer web app. Running video as a separate service removes that single point of failure.

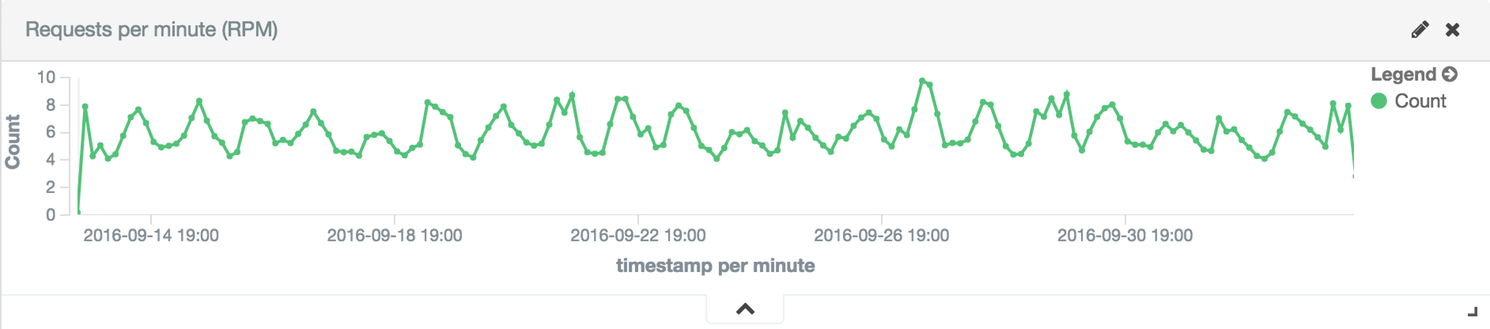

The service has been handling 100% of traffic since September 13, and it’s been on autopilot and stable for the last two weeks. The video pages are rendered on the server using React because other services (like Twitter and Facebook) need to be able to scrape them. It’s built with components from our shared Buffer Components library.

Key Stats:

~185K requests since Sept. 13

Avg. Response Time: 272.553ms

Team update: Welcome, Harrison!

Speaking of Harrison… we’re so happy to announce that Harrison has accepted our offer to join the team full-time! Harrison has had an immediate impact on the team. He has rebuilt our video player service from scratch, started a new React components library and taken over development of our links count service, which will soon handle our highest-traffic API endpoint.

When he’s not coding, Harrison plays both guitar and drums, shares a passion for long-distance running with his wife Morgan and has the two cutest German Shepherd dogs to ever grace our Zoom calls. Welcome, Harrison!

Great job! Joe at DroidCon

We were all cheering Joe on from the sidelines as he went to Vienna to speak at DroidCon.

His talk was Android TV: Building Apps with Google’s LeanBack Library. We’re super proud of his achievements and of him sharing his expertise with the wider Android community!

Career growth framework for engineers

Sunil, Niel and I are rolling out a new career growth framework for engineers and we’re excited to share it with the whole team in the coming month!

The framework maps out the expectations at each level of engineering and how engineering work at that level is conducted, opening up growth and advancement as a continuous conversation.

The goal is to bring greater fairness and transparency to growth and promotions and provide a clear path for our engineers to advance as individual contributors, without the need to become managers or face stagnation. Keep your eyes peeled for the framework itself to make an appearance soon.

Improving our code review process

Code reviews are incredibly important in our continuing quest for great code and maximum learning from each other.

The share of pull requests that received a code review stayed essentially flat in September: 68%, compared to 69% in August. So we made some changes to our code review process this month.

We switched to GitHub’s new review system, which lets reviewers communicate clearly by explicitly requesting changes or approving a pull request.

Additionally, Marcus implemented Danger, which automates common code review chores, on our Jenkins setup for the Buffer web-app repo and Android repo. Danger now reminds us to assign reviewers, adds cautionary labels for high-impact changes like core files or database queries, and warns for large pull requests.
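To make the three chores concrete, here is a minimal sketch of the kind of checks involved. It is plain Python, not Danger’s API (Danger rules live in a Dangerfile), and the path prefixes and the 500-line threshold are hypothetical values chosen for illustration, not our actual configuration.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical rules for illustration; not Buffer's actual Danger configuration.
HIGH_IMPACT_PREFIXES = ("core/", "db/queries/")  # hypothetical "high-impact" paths
LARGE_PR_LINES = 500  # hypothetical threshold for a "large" pull request


@dataclass
class PullRequest:
    reviewers: List[str]
    changed_files: List[str]
    additions: int
    deletions: int


def review_chores(pr: PullRequest) -> Tuple[List[str], List[str]]:
    """Return (warnings, labels) for a pull request, Danger-style."""
    warnings: List[str] = []
    labels: List[str] = []
    # 1. Remind the author to assign at least one reviewer.
    if not pr.reviewers:
        warnings.append("Please assign a reviewer.")
    # 2. Add a cautionary label when high-impact paths are touched.
    if any(path.startswith(HIGH_IMPACT_PREFIXES) for path in pr.changed_files):
        labels.append("high-impact")
    # 3. Warn when the diff is large.
    changed = pr.additions + pr.deletions
    if changed > LARGE_PR_LINES:
        warnings.append(f"Large PR: {changed} lines changed. Consider splitting it up.")
    return warnings, labels
```

For example, `review_chores(PullRequest([], ["core/app.php"], 400, 200))` yields a reviewer reminder, a large-PR warning and the `high-impact` label.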

We’re hopeful this will lead to more code reviews and more learning!

Fast and easy image resizing coming in October

Our idea: what if users could upload any image to Buffer and have it go out seamlessly to each network (GIFs too!)?

In the past, we’ve resized images to the lowest common denominator across all networks. However, as each social network has become more distinct, with its own image requirements, this approach stopped scaling well and often led to disappointing image problems for our users.

So Mike has been hard at work building a new image resizing service in Node that focuses specifically on resizing images for Buffer users.
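The core difference from the lowest-common-denominator approach is computing one target size per network. Here is a minimal sketch of that sizing step only, under stated assumptions: the network names and maximum dimensions are placeholders (not any network’s real limits), it never upscales, and the actual pixel resampling, which the real service does in Node, is out of scope.

```python
from typing import Dict, Tuple

# Placeholder per-network maximum sizes (width, height) in pixels.
# These are hypothetical values for illustration, NOT any network's real limits.
NETWORK_MAX_SIZE: Dict[str, Tuple[int, int]] = {
    "network_a": (1024, 512),
    "network_b": (1080, 1080),
    "network_c": (2048, 2048),
}


def fit_within(width: int, height: int, max_w: int, max_h: int) -> Tuple[int, int]:
    """Scale (width, height) down to fit inside max_w x max_h, keeping the aspect ratio.

    Images that already fit are left at their original size (no upscaling).
    """
    scale = min(max_w / width, max_h / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


def targets_for_upload(width: int, height: int) -> Dict[str, Tuple[int, int]]:
    """One target size per network, instead of a single lowest-common-denominator size."""
    return {
        network: fit_within(width, height, max_w, max_h)
        for network, (max_w, max_h) in NETWORK_MAX_SIZE.items()
    }
```

With these placeholder limits, a 2000×1000 upload would go out at 1024×512, 1080×540 and its original 2000×1000 respectively, whereas a lowest-common-denominator approach would send 1024×512 everywhere.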

This is part of our service oriented architecture push and we are majorly excited to see this service go live in October. This not only improves images for all our users, but it’ll also be a major milestone and step forward for Buffer’s new architecture.

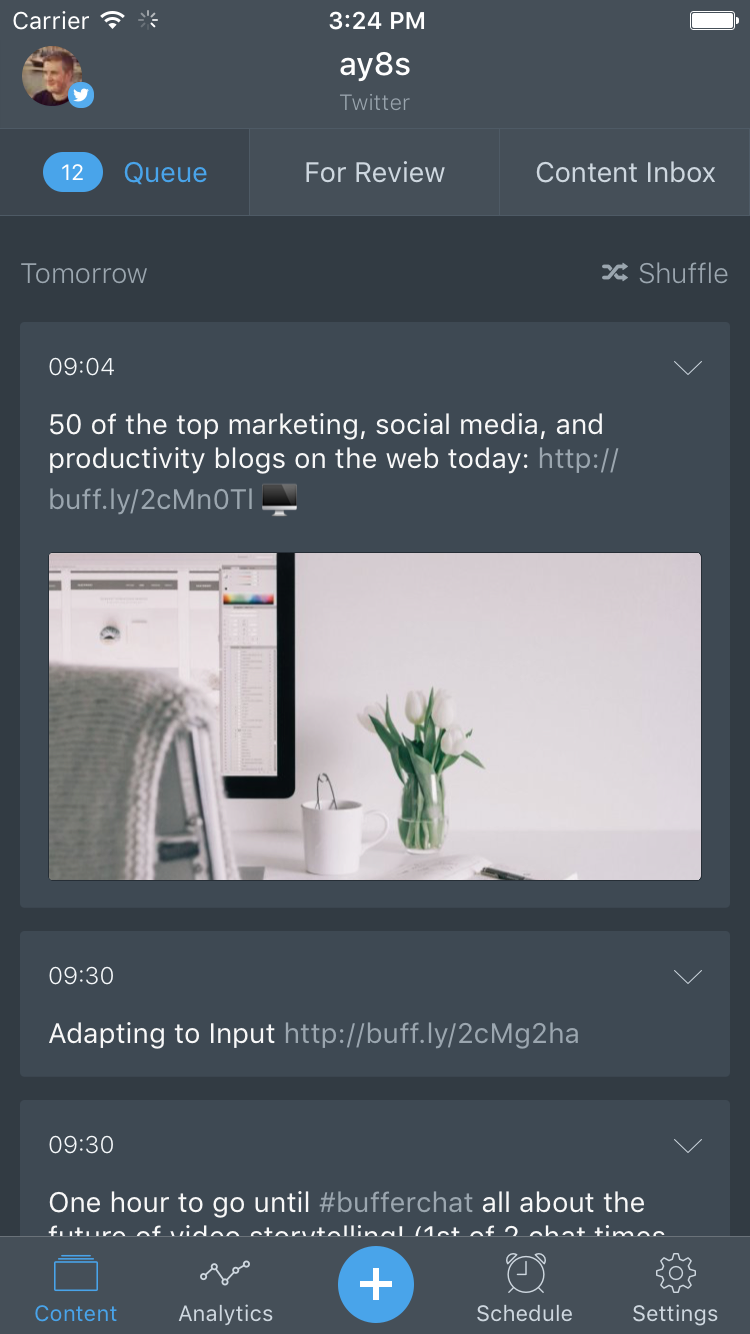

Mobile: Rich notifications in iOS 10; try our new dark mode!

On the mobile side, Joe and Marcus have rethought the entire structure of the Buffer Android app to keep the workspace clean and tidy and to let the team move much faster on the Android overhaul that is underway. Joe wrote up this awesome post on what the restructuring process looked like and everything the Android team learned!

With iOS 10 out in the wild, we pushed out v6.0 of the iOS app. It includes all of the AsyncDisplayKit tweaks we made to improve the performance of our update tables and hit the magical 60 frames per second needed for smooth scrolling. You can find more details about how and why we implemented AsyncDisplayKit in this great post by Andy.
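For context, here is the quick arithmetic behind that 60 frames-per-second target: every frame has a budget of roughly 16.7 ms, and any main-thread work that overruns it shows up as a dropped frame.

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{60\ \text{frames}} \approx 16.7\ \text{ms per frame}
$$

AsyncDisplayKit’s general approach is to move work such as text measurement, layout and rendering off the main thread, which helps keep each frame within that budget.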

We also spent a bit of time implementing a sweet-looking dark mode. With rumors that dark mode was coming in iOS 10 alongside the iPhone 7, we themed 90% of the app in a single Saturday hack session. iOS 10 didn’t bring a dark mode (yet), but we’re hopeful the rumors come true soon. In the meantime, you can enable ours in the Settings tab.

We’ve also made various iOS 10 improvements in the app, including richer notifications built on iOS 10’s new notification features. When you receive an Instagram Reminder, you’ll now see a thumbnail of the photo or video you’re about to share. Using 3D Touch on that notification shows a preview of the whole Instagram post with a quick action to post it.

With all these new additions, Apple featured Buffer in a few App Store Front Page categories including “Enhanced for 3D Touch” and “Turbo-Charged Notifications.” We’re super excited to add even more iOS 10 features soon!

Open source spreading across the globe

This month was busy for our iOS open source projects. With iOS 10 released and out in the wild, Jordan and Andy took some time to update our iOS projects (the BFRGifRefreshControl, BFRImageViewer and soon BufferSwiftKit) to build against iOS 9 instead of iOS 8. This allows the projects to stay current and minimize technical debt.

In addition, the BFRImageViewer has been fully localized in Spanish, some bugs have been squashed and it now handles iOS’ status bar using the modern API. This means there are no more pesky warnings in the project. Further, the BFRGifRefreshControl now supports haptic feedback if you have an iPhone 7 or 7 Plus!

There are some exciting new things we’ve begun to explore as part of the iOS team’s move to AsyncDisplayKit within Buffer for iOS. We plan to open source a few projects utilizing it, and as part of that we hope to have our media picker found in Buffer for iOS fully open source next month as well!

Currently, our open source projects are used in 118 apps and have been downloaded 5,428 times. We hope to grow those numbers and share even more of our code with other developers!

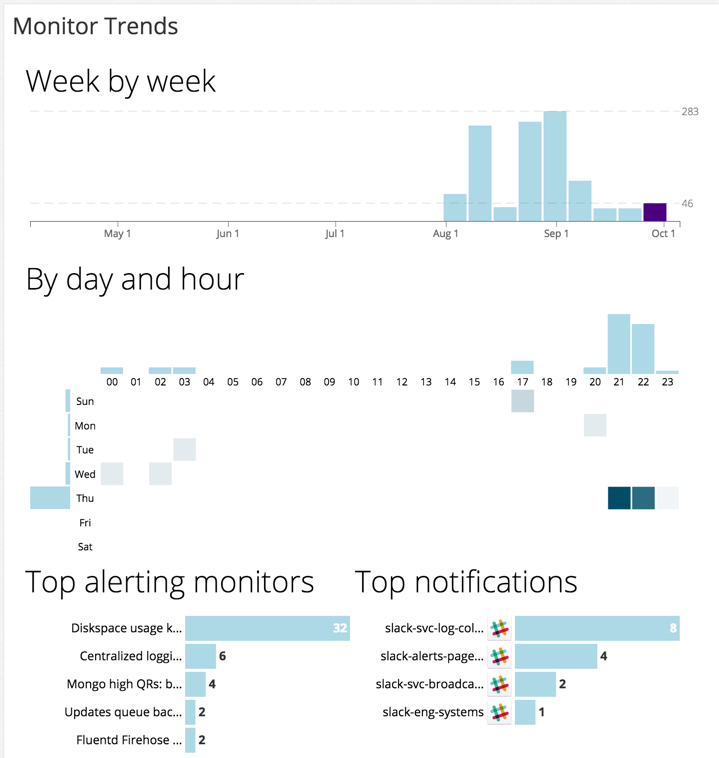

Kubernetes Cluster Progress

Key Stats

Pods: 48

Nodes: 7

Deployments: 118 in Sept.

This month we continued our adoption of Kubernetes to manage our container clusters so that we can deploy and scale services more effectively. (Curious to learn more about what Kubernetes is and why it’s awesome? We love The Children’s Illustrated Guide to Kubernetes for a quick explanation.)

In September we moved forward substantially, now running 48 pods in production with two more services fully moved over. Both the video service and links service are running on Kubernetes with the video service fully launched and the links service to begin taking traffic this week. More nodes have been added in September and our Kubernetes cluster is now at a total of 7 nodes. We’re excited to learn and share more about our increasing use of Kubernetes in production as we move more code out of the Buffer web monolith and into microservices.

As we adopt a service-oriented architecture on k8s, monitoring becomes one of the most important parts of the system. Without great monitoring, a service might fail for a while without anyone noticing. There are many more services to keep an eye on now than when we were a monolith, so we’ve been using Datadog to manage this task.

Eric led the work to improve our monitoring and reliability with Datadog, and we now have 23 monitors in place to help us keep an eye on all services.
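Datadog monitors are configured inside Datadog itself, so the following is not its API. As a generic illustration of what a threshold monitor evaluates, here is a sketch that alerts only when a metric stays above a threshold for several consecutive samples, which avoids paging on a single spike. The threshold and window size are hypothetical parameters.

```python
from typing import Iterable


def should_alert(samples: Iterable[float], threshold: float, consecutive: int) -> bool:
    """Return True once `consecutive` samples in a row exceed `threshold`.

    A single spike resets nothing on its own; the streak only counts
    uninterrupted runs of samples above the threshold.
    """
    streak = 0
    for value in samples:
        streak = streak + 1 if value > threshold else 0
        if streak >= consecutive:
            return True
    return False
```

For example, response times of `[100, 300, 320, 310]` ms against a hypothetical 250 ms threshold and a window of 3 would trigger an alert, while `[300, 100, 300, 300]` would not.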

We’ve also made deployments to k8s easier by standardizing the labels that identify the parts of the infrastructure you’re concerned with, like so:

```yaml
labels:
  app: analytics
  service: worker-elasticsearch
  track: stable
# Now you can select all pods within the analytics app:
#   kubectl get po -l app=analytics
```
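To show why consistent labels make selection easy, here is a simplified sketch of equality-based selector matching, similar in spirit to what `kubectl get po -l app=analytics` does. It only handles the `key=value` form joined by commas; Kubernetes also supports `==`, `!=` and set-based selectors, which this sketch deliberately omits. The pod names are hypothetical.

```python
from typing import Dict, List


def parse_selector(selector: str) -> Dict[str, str]:
    """Parse a simple equality selector such as 'app=analytics,track=stable'."""
    wanted: Dict[str, str] = {}
    for term in selector.split(","):
        key, sep, value = term.partition("=")
        if not sep:
            raise ValueError(f"unsupported selector term: {term!r}")
        wanted[key.strip()] = value.strip()
    return wanted


def select(pods: Dict[str, Dict[str, str]], selector: str) -> List[str]:
    """Return the names of pods whose labels match every key=value pair."""
    wanted = parse_selector(selector)
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in wanted.items())
    )


# Hypothetical pods, labeled with the standard keys shown above.
PODS = {
    "analytics-es-1": {"app": "analytics", "service": "worker-elasticsearch", "track": "stable"},
    "analytics-api-1": {"app": "analytics", "service": "api", "track": "canary"},
    "video-web-1": {"app": "video", "service": "web", "track": "stable"},
}
```

Here `select(PODS, "app=analytics")` matches both analytics pods, and adding `,track=stable` narrows it to the stable one; because every team uses the same keys, the same query works across services.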

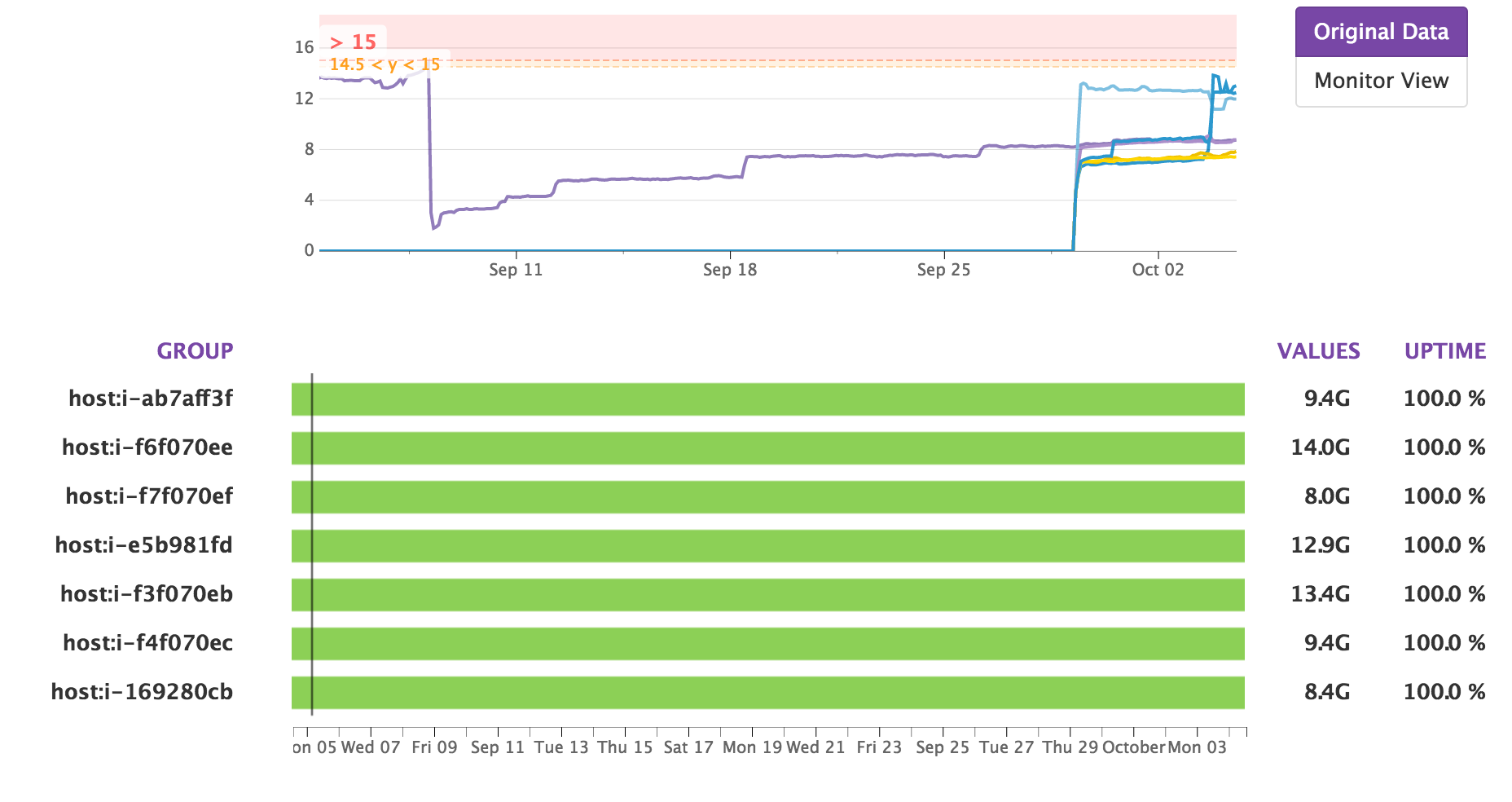

Memory Utilization (per node):

We’ve stayed below the warning and error thresholds on every minion (worker node) for the past month, but it looks like we’ll probably need to add more minions to the Kubernetes cluster soon. Another big deployment (like the upcoming links service) could put us over the limit.
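One rough way to reason about whether another big deployment would put us over the limit is to check whether its replicas fit on the existing nodes without any node crossing the warning threshold. This sketch assumes equal memory capacity per node, a hypothetical 80% warning threshold and a simple greedy placement; the real Kubernetes scheduler works from resource requests and weighs many more factors.

```python
from typing import List, Optional

WARN_RATIO = 0.8  # hypothetical warning threshold (80% of node memory)


def place_replicas(
    node_used_mib: List[int], capacity_mib: int, replicas: int, request_mib: int
) -> Optional[List[int]]:
    """Greedily place each replica on the least-used node.

    Returns the node index chosen for each replica, or None if any
    placement would push a node above the warning threshold.
    """
    used = list(node_used_mib)
    placement: List[int] = []
    for _ in range(replicas):
        node = min(range(len(used)), key=lambda i: used[i])
        if (used[node] + request_mib) / capacity_mib > WARN_RATIO:
            return None  # we'd need more nodes first
        used[node] += request_mib
        placement.append(node)
    return placement
```

With three hypothetical 8 GiB nodes using 4000, 5000 and 6000 MiB, three 1000 MiB replicas fit, but five would not, which is the kind of signal that says the cluster needs more nodes before the next big rollout.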

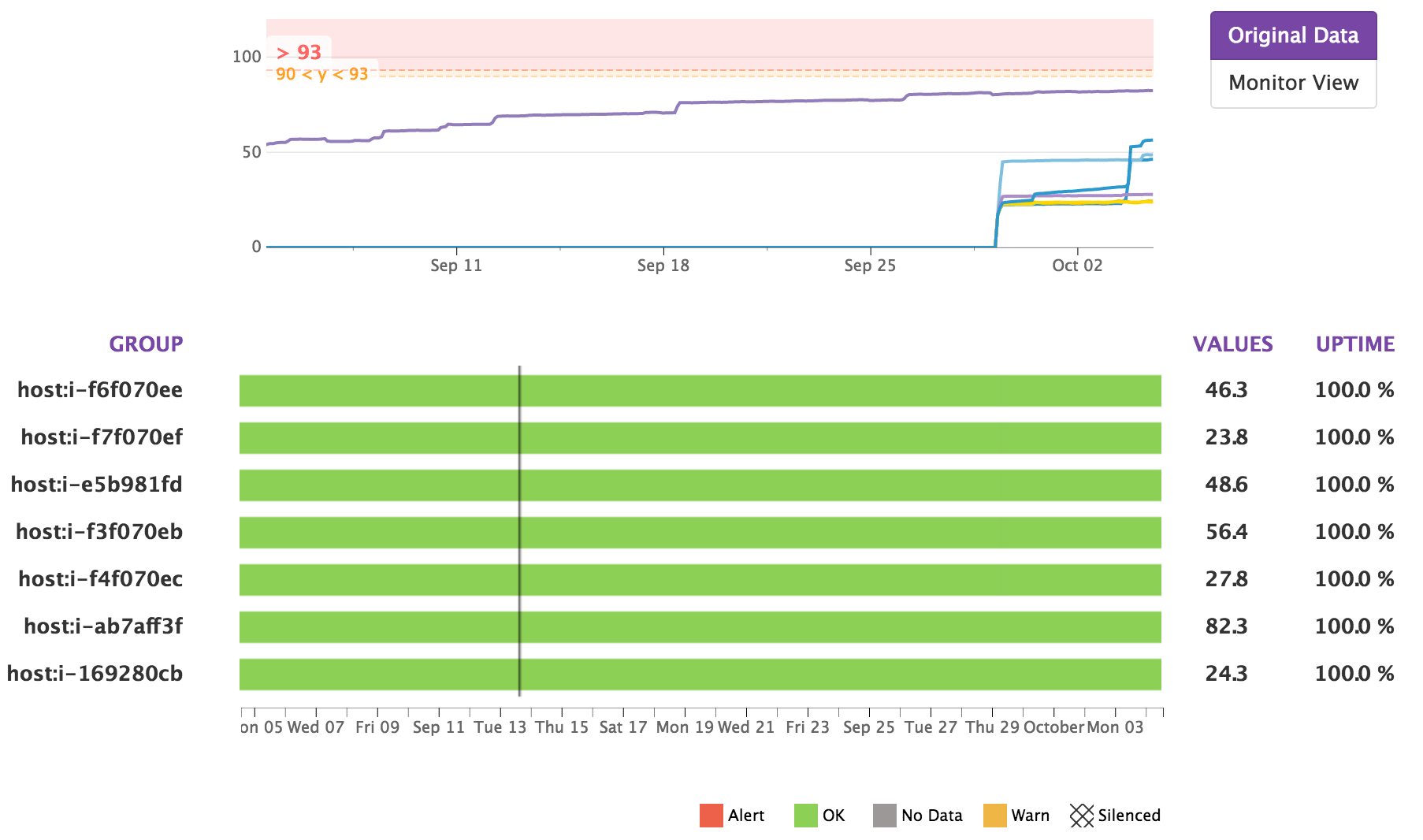

Disk Usage (per node):

Disk utilization has been healthy over the past month, though it would be worth taking a look at the one host whose usage has slowly crept up.

Introducing Centralized Log Collector

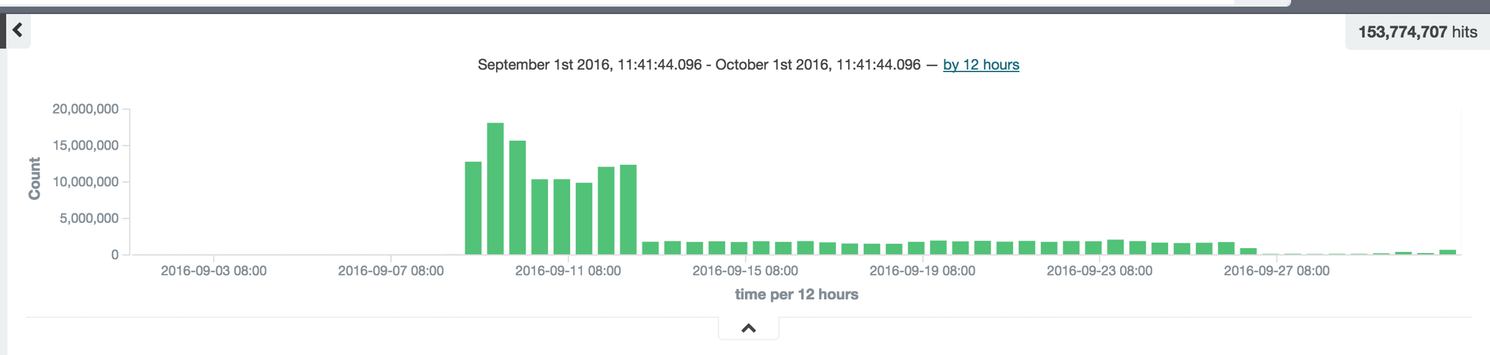

Log Messages: 153,774,707

In September, Steven implemented the first version of our centralized log collector (powered by td-agent). It runs at the Kubernetes node level, collecting log messages from all containers and indexing them into our dedicated Elasticsearch cluster.

This greatly helps us by making logging as easy as writing messages to stdout. We’ll keep working in this area so the log collector can also aggregate logs sent to a TCP forward port. Super excited for the next iteration.
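From an application’s point of view, “logging is just writing to stdout” can look like the sketch below: one JSON object per line, with no Elasticsearch client in the app at all. It assumes, as is typical for td-agent/Fluentd on Kubernetes, that each container’s stdout ends up in a file on the node that the collector tails; the field names are hypothetical, not a schema we’ve standardized on.

```python
import json
import sys
import time


def log(level: str, message: str, **fields) -> str:
    """Write one JSON log record per line to stdout and return the line.

    The node-level collector picks up each stdout line and ships it to
    Elasticsearch, so the app never talks to Elasticsearch directly.
    """
    record = {"timestamp": time.time(), "level": level, "message": message}
    record.update(fields)  # hypothetical extra fields, e.g. service name
    line = json.dumps(record, sort_keys=True)
    sys.stdout.write(line + "\n")
    sys.stdout.flush()  # don't let buffering delay lines reaching the collector
    return line


if __name__ == "__main__":
    log("info", "video rendered", service="video", duration_ms=42)
```

Emitting structured JSON rather than free-form text means fields like `service` can be filtered on directly once the records are indexed.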

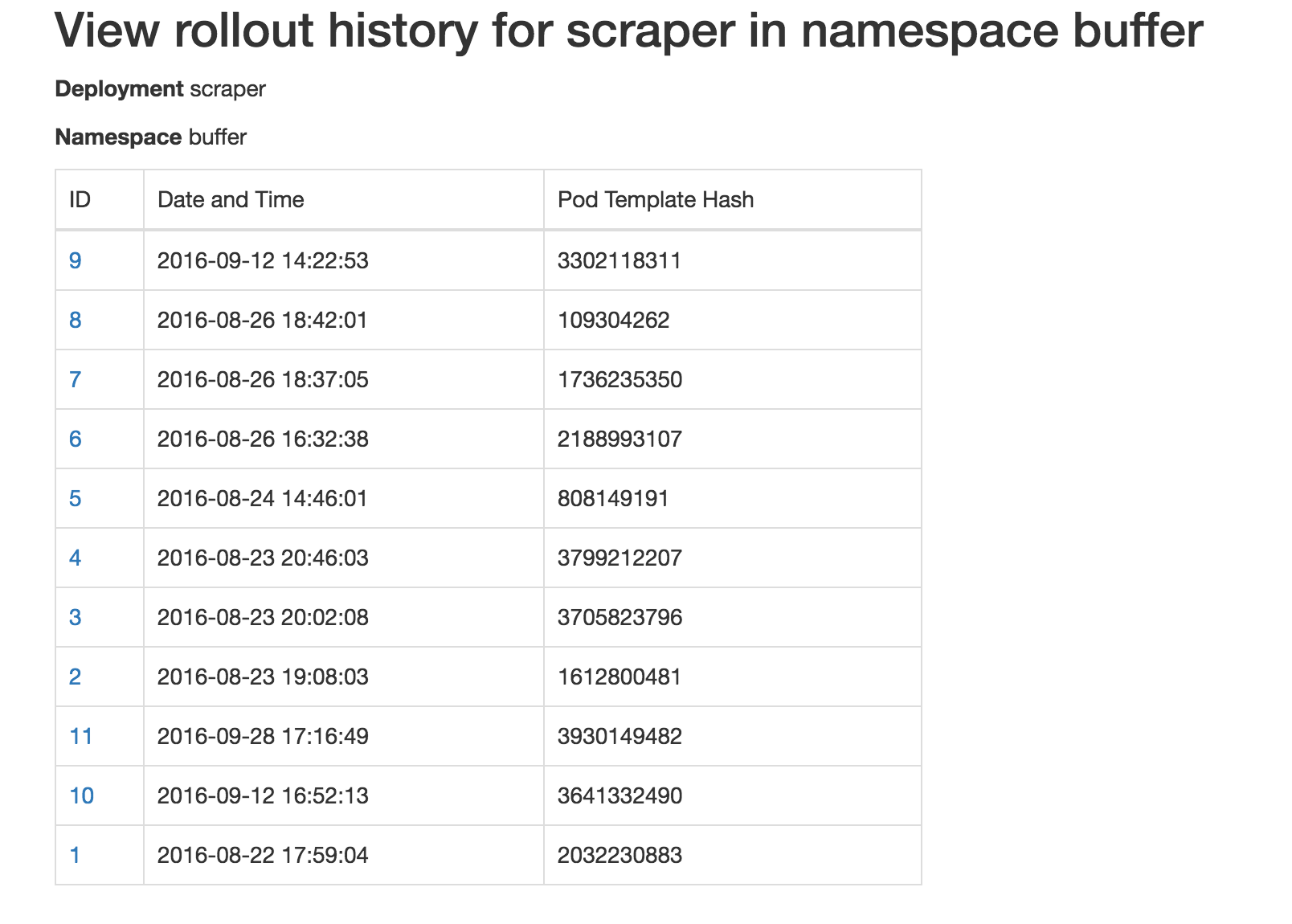

Kubedash Made Deployment Rollback Easier

In September, Adnan implemented the first version of Kube Dashboard, which lets engineers roll back to any previous deployment version if something goes wrong.

This is a critical feature for a safer development process: without it, finding a previous deployment and redeploying it in a hurry is often challenging.
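The idea behind one-click rollback is simply keeping an ordered history of what was deployed. Kubernetes itself offers `kubectl rollout undo` for Deployments; the sketch below is a simplified in-memory model for illustration, not how Kube Dashboard or Kubernetes actually store revision history, and the image tags are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DeploymentHistory:
    """In-memory history of deployed image tags (hypothetical model, oldest first)."""

    revisions: List[str] = field(default_factory=list)

    def deploy(self, image: str) -> int:
        """Record a deployment and return its 1-based revision number."""
        self.revisions.append(image)
        return len(self.revisions)

    @property
    def current(self) -> str:
        return self.revisions[-1]

    def rollback(self, to_revision: int) -> int:
        """Redeploy an earlier revision; the rollback itself becomes a new revision."""
        if not 1 <= to_revision <= len(self.revisions):
            raise ValueError(f"no such revision: {to_revision}")
        return self.deploy(self.revisions[to_revision - 1])
```

Recording the rollback as a new revision, rather than deleting later entries, keeps an audit trail of what was running when, and makes "roll forward again" just another rollback.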

Analytics: We decreased processing time by 65%!

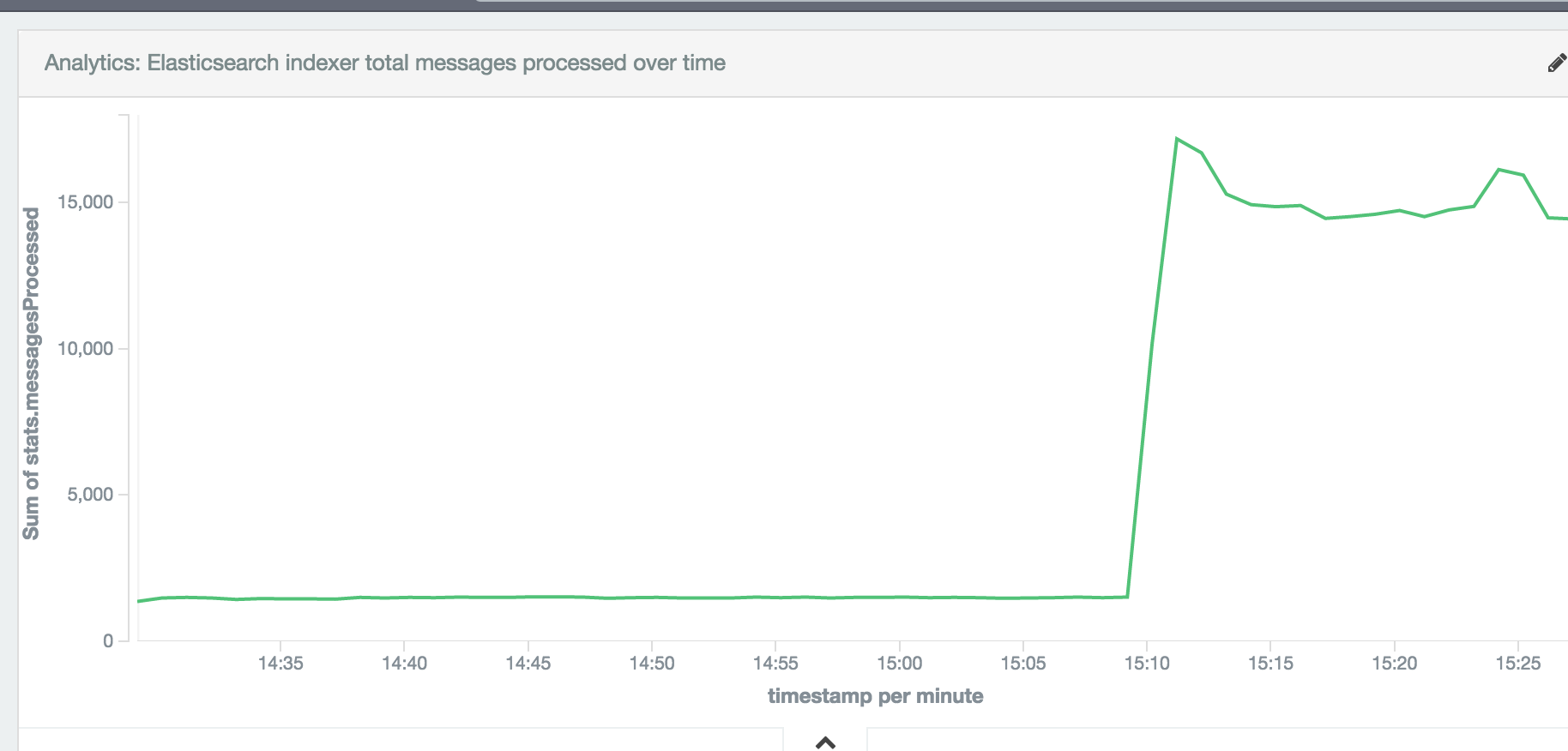

Tigran removed 22 analytics workers running on utility servers and replaced them with new analytics containers running in Kubernetes.

With this switch we now have a completely separate deployment process for these workers that is super fast and makes them easier to iterate on. All of these workers now run on PHP 7 and are fully monitored in Datadog and Kibana.

The processing time of these analytics workers decreased by 65%, which dramatically increased the total number of messages processed.
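As a rough illustration of why a 65% cut matters so much: assuming the same number of workers stay fully busy and nothing else changes, cutting per-message processing time by 65% allows up to roughly 2.9× the throughput.

$$
\frac{\text{throughput}_{\text{after}}}{\text{throughput}_{\text{before}}} = \frac{t_{\text{before}}}{t_{\text{after}}} = \frac{t}{(1 - 0.65)\,t} = \frac{1}{0.35} \approx 2.86
$$

The actual increase in messages processed also depends on how many messages are waiting in the queue, so this is an upper bound under those assumptions rather than a measured figure.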

Tigran also built out two new API endpoints that are powered by Twitter Engagements Gnip data stored in a separate analytics Elasticsearch cluster. This allows us to serve much richer Twitter analytics to our Buffer for Business customers.

Introducing Buffer Components

Harrison also worked with Steve to build out the beginnings of a component library, so we can reuse much more code and build faster and more reliably.

All components are functional and stateless, which makes them simple and flexible. They can be used to render state from any datastore (like Redux or Flux).

We currently have 7 components, all of which are in use by the Buffer video service (more on that above!). Components are fully documented, tested and shipped to npm under our @bufferapp/components namespace. We’ve also got a storybook deployed to GitHub so you can try out the components live!

Over to you

Is there anything you’d love to learn more about? Anything we could share more of? We’d love to hear from you in the comments!
