Just over a year ago, my role at Buffer shifted, and I became a Growth Marketing Manager on Buffer’s Growth Marketing team. The change came at a time when we needed to quickly scale how we run experiments so we could start finding ways to improve our marketing performance.

Experiments are an important part of how marketing teams work. They are a proven method for understanding the causal impact of a change and for improving marketing strategy quickly and iteratively. Running experiments not only gives us a much better understanding of what works and what doesn’t; it also helps us drive meaningful impact on our key metrics in a controlled, measurable way.

For Buffer’s Growth Marketing team, running experiments is fundamental to the way we work. We’re unlikely to change our website, emails, or ad campaigns without first proving through an experiment that the change leads to more conversions. We believe in the experimental methodology so much that we’ve set a goal of running five experiments across web, email, and paid marketing every month. Within paid marketing alone, we’ve run around 30 experiments in the last year, which have helped us substantially improve our paid channel strategy and steadily reduce what it costs us to acquire each new user.

I own and drive our paid marketing programs across Meta, Google, and any other channels we validate. To date, I’ve run 25 experiments in our Google Ads account. In this article, I’m sharing how I come up with experiment ideas and what I’ve learned so far from running them.

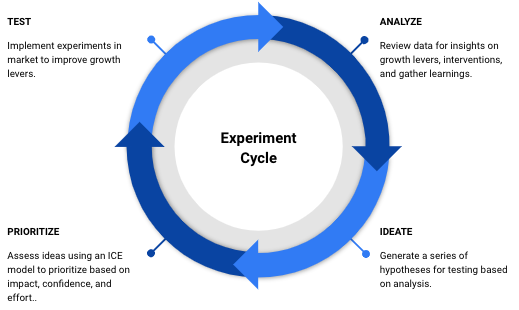

The four stages of a successful marketing experiment

For our team at Buffer, the experiment lifecycle has four stages:

Analyze: Review data across the funnel for insights and opportunities, and identify existing friction points that keep our users from converting.

Ideate: Generate as many experiment ideas and hypotheses as possible that could remove the friction points we just found. Each idea should contain the following details:

- The core hypothesis, your assumptions, and learning objectives

- A brief description of the experiment idea and of the variant

- How we’ll measure success, and which metrics will be affected

- An ICE Rating (more on this in the next point)

Prioritize: We assess every idea with an ICE scoring model, rating its Impact, Confidence, and Ease, which lets us prioritize the experiment ideas in our backlog before committing to them. This is a critical step because what we choose to test carries a real opportunity cost: every experiment we say “yes” to means saying “no” to another.

Test: Launch the experiment and let it run until the difference between the control and the variant is statistically significant. We aim for 95 percent confidence before declaring a winner, and we usually let an experiment run for about two weeks (one cycle).

Then the cycle starts again! The first step is to analyze the results from the test and take away learnings that we can implement into our next experiment.
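To make the Prioritize stage more concrete, here is a minimal Python sketch of ICE scoring. It assumes each idea is rated from 1 to 10 on Impact, Confidence, and Ease and that the three ratings are averaged; teams differ on both choices (some multiply the ratings instead), and the idea names and ratings below are hypothetical, not Buffer’s actual backlog.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    """One backlog entry with its three ICE ratings (assumed 1-10 scale)."""
    name: str
    impact: int      # expected effect on the target metric
    confidence: int  # how sure we are that the hypothesis holds
    ease: int        # how cheap and fast the experiment is to launch

    def ice_score(self) -> float:
        # Assumption: a simple average of the three ratings.
        for value in (self.impact, self.confidence, self.ease):
            if not 1 <= value <= 10:
                raise ValueError(f"{self.name}: ratings must be between 1 and 10")
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(backlog: list[ExperimentIdea]) -> list[ExperimentIdea]:
    """Return the backlog sorted from highest to lowest ICE score."""
    return sorted(backlog, key=lambda idea: idea.ice_score(), reverse=True)


# Hypothetical backlog entries, for illustration only.
backlog = [
    ExperimentIdea("Mention free plan in ad text", impact=8, confidence=7, ease=9),
    ExperimentIdea("New competitor landing page", impact=7, confidence=5, ease=4),
    ExperimentIdea("Country-specific headlines", impact=6, confidence=6, ease=8),
]

for idea in prioritize(backlog):
    print(f"{idea.ice_score():.2f}  {idea.name}")
```

Whichever formula you choose, the value comes from applying it consistently so that ideas in the backlog can be compared against each other.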

There are many types of experiments a marketing team can run, but the one we use most often at Buffer is A/B testing.

A/B testing is a simple way to test a hypothesis by comparing two options: option A (the control) and option B (the variant). It’s a randomized test in which 50 percent of people see one option, usually whatever is currently running (the control), while the other 50 percent see a new option (the variant). This type of test is especially useful for comparing two versions of an ad, landing page, email, or homepage.
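One common way to check a 95 percent confidence threshold on a conversion-rate A/B test is a two-proportion z-test. The sketch below is a generic statistical check under stated assumptions (independent users, samples large enough for the normal approximation); it is not how Google Ads computes the significance it reports for its own experiments, and the conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare the conversion rates of the control (A) and the variant (B).

    Returns the z statistic and the two-sided p-value, using the pooled
    normal approximation (assumes reasonably large samples in both arms).
    """
    if min(n_a, n_b) <= 0:
        raise ValueError("each arm needs at least one user")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no conversions (or all conversions) in both arms
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 200 of 5,000 control users vs. 250 of 5,000 variant users converted.
z, p = two_proportion_z_test(conv_a=200, n_a=5_000, conv_b=250, n_b=5_000)
is_winner = p < 0.05 and z > 0  # 95 percent confidence and the variant is ahead
print(f"z = {z:.2f}, p = {p:.4f}, declare variant winner: {is_winner}")
```

Checking the result only at the end of a fixed run, rather than stopping the moment p dips below 0.05, keeps the false-positive rate close to the 5 percent the threshold implies.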

My process for coming up with new experiment ideas

My team works in two-week cycles, which means that at the start of each cycle, we plan and prioritize the tasks that we need to complete by the end of the cycle. Conducting an experiment matches up perfectly with that timeline.

On the first or second day of the cycle, I analyze the data, jot down experiment ideas, re-prioritize, and then launch some new experiments.

At the start of the following cycle, I shut down the experiment, extract insights, and then use those learnings to come up with more experiment ideas. Then, we go through the same process again.
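Whether a two-week cycle is long enough depends on traffic. A standard way to estimate it is the per-arm sample size for comparing two proportions at 95 percent confidence and 80 percent power. This sketch assumes a hypothetical 4 percent baseline conversion rate, a 10 percent relative lift worth detecting, and 3,000 users per arm per day; all three are placeholders to replace with your own numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in each arm to detect the given relative lift (two-sided test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95 percent confidence
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80 percent power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(per_arm: int, daily_users_per_arm: int) -> int:
    """Whole days the test must run to reach the required sample in each arm."""
    return math.ceil(per_arm / daily_users_per_arm)


n = sample_size_per_arm(baseline_rate=0.04, relative_lift=0.10)
print(f"{n} users per arm, about {days_needed(n, daily_users_per_arm=3_000)} days")
```

Smaller expected lifts or lower baseline rates push the required sample up quickly, which is one reason a test may need more than a single cycle.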

There are two ways I come up with new experiment ideas:

- Analyzing the performance of our Google Ads campaigns to look for friction points.

- Extracting learnings from the previous experiments and applying them to new experiments.

Here’s more about each of these:

1. Look for friction points

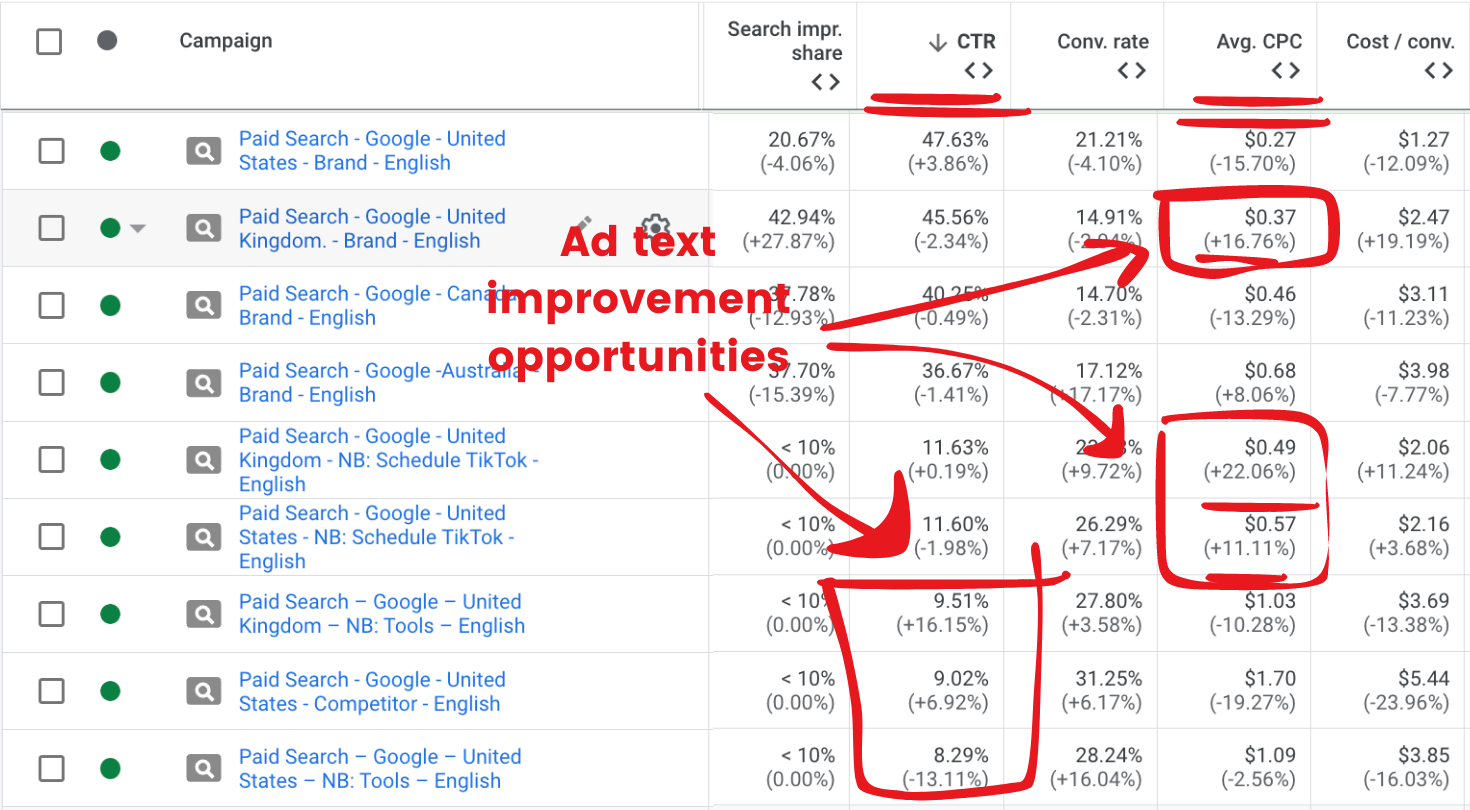

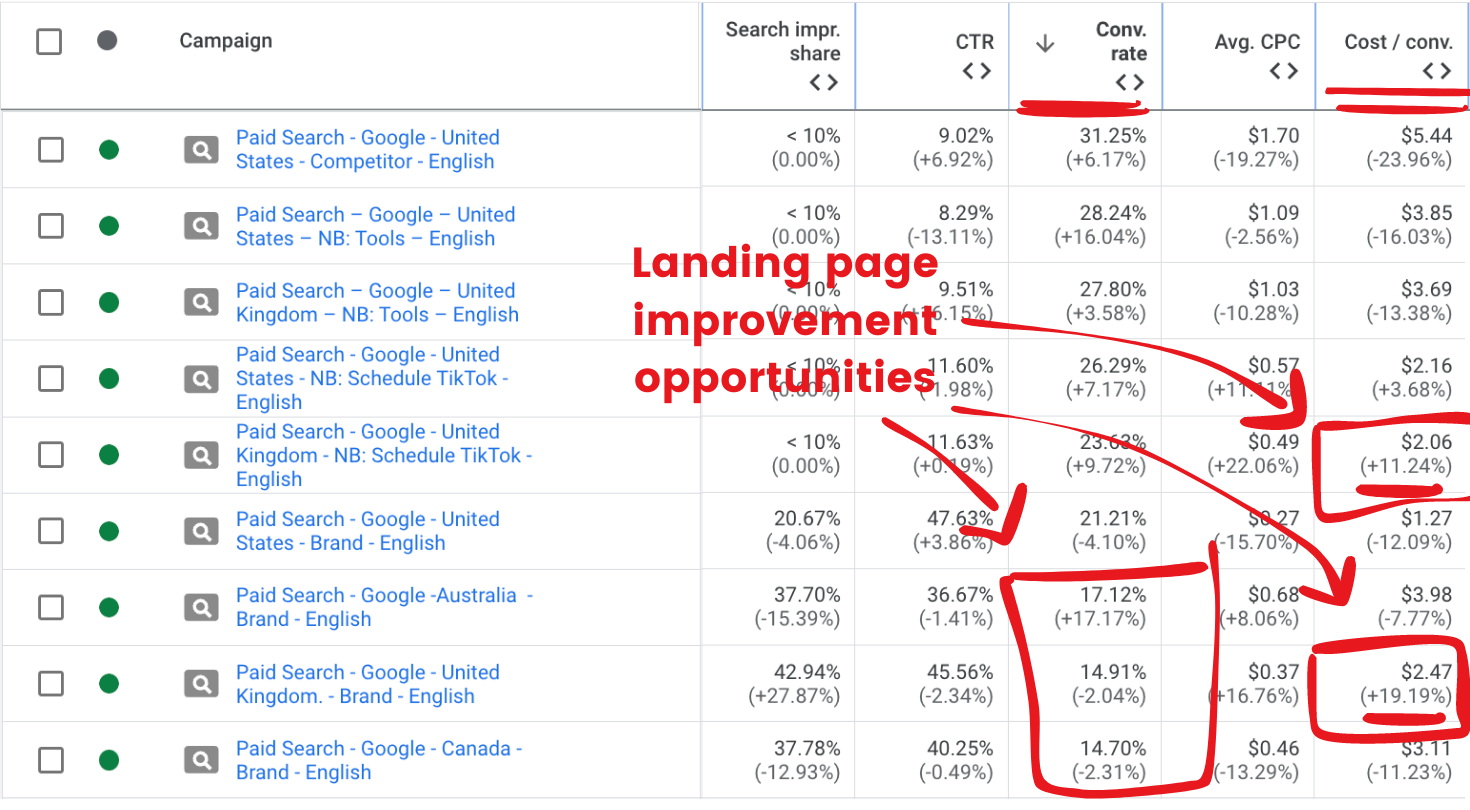

I typically compare our campaigns’ performance against one another, as well as the current period against the previous one, to spot increases or decreases in Search Impression Share, Click-Through Rate, or Conversion Rate.

When looking at those performance metrics, I look at ratios instead of absolute numbers because they help me understand trends and changes in performance better, plus they normalize data across campaigns of different sizes or budgets.

Generally, click-related ratios, such as Click-Through Rate (CTR) and Cost Per Click (CPC), signal whether there’s room to improve your ad text.

Conversion-related ratios, such as Conversion Rate and Cost Per Acquisition (CPA), signal optimizations that could be made to your landing page.
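Here is a minimal sketch of that ratio comparison: it derives CTR, CPC, Conversion Rate, and CPA from raw campaign totals and reports the percent change between two periods. The field names and figures are hypothetical; in practice the inputs would come from a Google Ads report export.

```python
from dataclasses import dataclass


def _ratio(numerator: float, denominator: float) -> float:
    # Return NaN instead of raising when a denominator is zero (e.g. no clicks).
    return numerator / denominator if denominator else float("nan")


@dataclass
class PeriodStats:
    impressions: int
    clicks: int
    cost: float
    conversions: int

    def ratios(self) -> dict[str, float]:
        return {
            "ctr": _ratio(self.clicks, self.impressions),             # Click-Through Rate
            "cpc": _ratio(self.cost, self.clicks),                    # Cost Per Click
            "conversion_rate": _ratio(self.conversions, self.clicks),
            "cpa": _ratio(self.cost, self.conversions),               # Cost Per Acquisition
        }


def percent_change(current: PeriodStats, previous: PeriodStats) -> dict[str, float]:
    """Percent change of each ratio from the previous period to the current one."""
    now, before = current.ratios(), previous.ratios()
    return {name: _ratio(now[name] - before[name], before[name]) * 100 for name in now}


# Hypothetical totals for one campaign over two consecutive periods.
previous = PeriodStats(impressions=50_000, clicks=1_500, cost=3_000.0, conversions=60)
current = PeriodStats(impressions=52_000, clicks=1_300, cost=2_860.0, conversions=65)
for name, change in percent_change(current, previous).items():
    print(f"{name:>16}: {change:+.1f}%")
```

In this made-up example, CTR falls and CPC rises while Conversion Rate improves and CPA drops, so by the reasoning above the ad text, not the landing page, would be the next thing to test.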

As with ad text improvements, I look at the landing pages with the highest conversion rates for inspiration for new experiment ideas.

Here are some other ways you can improve your Conversion Rate:

- Optimize your landing pages by matching the copy and offer to your ad text

- Make your offer more compelling

Lastly, Search Impression Share tells you the percentage of impressions your ads received out of the total impressions they were eligible to get.

If you have a low Search Impression Share, it likely means that your ads aren’t competitive enough in the auctions, your keywords aren’t relevant, or your budget and bids might be too small.
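As a rough diagnostic, the sketch below computes Search Impression Share as impressions divided by eligible impressions and, when it falls under a threshold, compares the share lost to budget with the share lost to rank (Google Ads reports these as “Search lost IS (budget)” and “Search lost IS (rank)”). The 50 percent threshold and the sample figures are hypothetical assumptions, and the suggested fixes simply mirror the list in this section.

```python
def search_impression_share(impressions: int, eligible_impressions: int) -> float:
    """Share of the impressions the ads were eligible for that they actually received."""
    return impressions / eligible_impressions if eligible_impressions else 0.0


def diagnose(impressions: int, eligible_impressions: int,
             lost_to_budget: float, lost_to_rank: float,
             threshold: float = 0.5) -> str:
    """Suggest where to look first; lost_* values are fractions between 0 and 1."""
    share = search_impression_share(impressions, eligible_impressions)
    if share >= threshold:
        return f"impression share {share:.0%}: no action needed"
    if lost_to_budget >= lost_to_rank:
        return f"impression share {share:.0%}: mostly lost to budget, consider a higher budget"
    return (f"impression share {share:.0%}: mostly lost to rank, consider higher bids "
            "or more relevant keywords and ads")


# Hypothetical campaign numbers.
print(diagnose(impressions=4_000, eligible_impressions=10_000,
               lost_to_budget=0.15, lost_to_rank=0.45))
```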

Here are some ways you can improve your Search Impression Share:

- Increase your budget

- Increase your bid amount

- Optimize your keywords by turning off the ones that are not performing well

2. Apply insights from previous experiments to new experiments

Past experiments tell a lot of stories about what works and what doesn’t; you just have to dig through the numbers to find them. I regularly go back through the results of past experiments to see what we changed and how it performed.

This practice keeps paying off. A few weeks ago, we ran an A/B test for a specific campaign where I made updates to our landing page and ad text to better emphasize Buffer’s free plan. My hypothesis was that the Cost Per Acquisition (CPA) would decrease by 10 percent with those changes.

The variant campaign ended up outperforming the control campaign, with a seven percent decrease in CPA and a six percent increase in conversions.

I applied these learnings to another campaign, and the variants beat the controls there as well.

7 lessons learned from running 25 Google Ads A/B tests

Through all of this experimentation, I’ve learned a lot of lessons. Here are seven key takeaways from my experience conducting 25 Google Ads A/B tests:

1. Test ad text, keywords, and landing page copy simultaneously ✅

I used to run experiments that A/B tested only one variable: only ad copy, only landing page changes, or only keywords. Right from the start, I noticed that whenever I tested a single variable (for example, only ad text) without carrying that change across the entire campaign, the variant would often lose to the control.

With time, I learned that when conducting an experiment, it’s best to test ad text, keywords, and landing page copy simultaneously. Why is that? It’s key to match the landing page to the promise of your ad, just as it’s important to ensure your ads match the intent of the user’s search query. Therefore, by using your keywords in your ad headlines, ad descriptions, and landing page copy, you show users that your ad is directly relevant to their search and that the landing page is relevant to the ad they clicked on.

As an example, if you’d like to A/B test the effect of adding the phrase “14-day free trial” to your ad text, you should also include that phrase in new keywords and in a few places on your landing page, rather than adding it to your ad text alone. In my experience, this makes for a much more successful experiment.
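A quick way to catch a mismatch before launching is to check that the phrase under test actually appears in every asset group of the variant. This minimal sketch uses plain case-insensitive substring matching, and the asset names and copy are hypothetical placeholders.

```python
def assets_missing_phrase(phrase: str, assets: dict[str, list[str]]) -> list[str]:
    """Return the names of asset groups in which the phrase never appears."""
    needle = phrase.lower()
    return [
        name for name, texts in assets.items()
        if not any(needle in text.lower() for text in texts)
    ]


# Hypothetical variant copy, for illustration only.
variant = {
    "headlines": ["Social Media Scheduler", "Start Your 14-Day Free Trial"],
    "descriptions": ["Plan and publish posts in one place. 14-day free trial."],
    "keywords": ["social media scheduler free trial"],
    "landing_page": ["Try it with a 14-day free trial.", "Schedule posts in minutes."],
}
print(assets_missing_phrase("14-day free trial", variant))
```

Here the keyword list would be flagged, since “free trial” alone doesn’t carry the full phrase into the keywords.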

2. Keeping an eye on what your competitors are doing is key ✅

Staying up to date with your competitors’ changes and updates can expose new opportunities for your own strategy. For example, on January 23, 2023, we noticed a lot of chatter on Twitter about Hootsuite’s decision to discontinue its free plan on March 31, 2023. We knew that Hootsuite’s removal of its free plan would lead more and more people to search for generous free alternatives, and that we were perfectly placed to attract those people to Buffer.

With this in mind, it made sense for us to increase our budgets for our competitor campaign, which specifically targeted keywords like “free hootsuite alternatives” and “free version of hootsuite.” In addition to this, we also began to test the efficacy of mentioning our free plan in our other campaigns and found success with that as well.

You can also look for trends in your competitors’ ad copy and landing pages to inspire your own experiments.

3. Use the highest-converting keywords across your campaigns ✅

Analyzing our top-performing keywords has given us crucial insights into what words resonate with our audience. This can be a useful strategy to guide the creation of ad text, landing page updates, and even new campaigns.

As an example, a few months ago I sorted our top keywords by lowest Cost Per Lead (CPL) and found that these words appeared most often:

- “scheduler” appeared in 12 of our top keywords

- “tiktok” appeared in nine of them

- “free” appeared in seven of them

- “Instagram” appeared in six of them

- “tools” appeared in five of them

- “viral” and “hootsuite” each appeared in three of them
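This kind of keyword analysis can be reproduced with a few lines of Python: rank keywords by Cost Per Lead, keep the top N, and count how many of those keywords contain each word. The keyword list, costs, and lead counts below are made up for illustration, and keywords with zero leads are skipped because their CPL is undefined.

```python
from collections import Counter


def word_counts_in_top_keywords(keywords: list[dict], top_n: int) -> Counter:
    """Count, for each word, how many of the top_n lowest-CPL keywords contain it."""
    with_leads = [kw for kw in keywords if kw["leads"] > 0]
    ranked = sorted(with_leads, key=lambda kw: kw["cost"] / kw["leads"])  # lowest CPL first
    counts: Counter = Counter()
    for kw in ranked[:top_n]:
        # set() so a word counts once per keyword, matching "found in N keywords"
        counts.update(set(kw["text"].lower().split()))
    return counts


# Hypothetical keyword report rows.
keywords = [
    {"text": "free tiktok scheduler", "cost": 120.0, "leads": 40},
    {"text": "instagram scheduler", "cost": 200.0, "leads": 50},
    {"text": "free hootsuite alternatives", "cost": 90.0, "leads": 20},
    {"text": "social media tools", "cost": 300.0, "leads": 30},
    {"text": "tiktok scheduler app", "cost": 150.0, "leads": 25},
    {"text": "enterprise social suite", "cost": 500.0, "leads": 0},
]

counts = word_counts_in_top_keywords(keywords, top_n=4)
for word, count in counts.most_common(3):
    print(word, count)
```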

Based on this finding, we ran a series of experiments that A/B tested adding the word “free” to keywords and copy. This was a major finding for us, as we were able to massively increase our Conversion Rate simply by promoting our free plan.

4. Make the ad more relatable by mentioning the searcher’s country ✅

We noticed that adding country-specific references to our ad copy made our ads more relatable and engaging, which led to better ad rankings and more impressions and clicks.

As an example, in one of our A/B tests for a campaign in Australia, we added Australia-specific references. The control campaign had no references to Australia, while the variant included Australian references in the ad text, URL, headline, and customer testimonial.

Here is the landing page of the control:

Here is the landing page of the variant:

By building the ads around a specific country, we increased both click-through and conversion rates, which suggests that we connected better with the people seeing our ads.

5. Follow an iterative process for A/B testing ✅

Running marketing experiments is an iterative process, with each test building on the results of the previous one. Even a failed test is an opportunity to understand what went wrong and make sure those mistakes aren’t repeated. By applying the insights from each experiment to the next, you increase the likelihood that your next experiments will succeed.

At the end of each experiment, I write a summary of the results in the experiment brief, including a conclusion and recommendations for follow-up experiments. I also keep a running document of insights from all of the experiments we’ve run, which is helpful to go back to when looking for new ideas for upcoming tests.

Lastly, don’t keep all this knowledge just with the people you directly work with. I often share my learnings with other teams, especially the Product team and the Product Marketing team, as they can apply a lot of the insights to their own experiments, campaigns, and product features.

6. Leverage Responsive Search Ads ✅

Google’s responsive search ads (RSAs) let you create an ad that adapts to show more relevant messages to users. The feature uses machine learning to determine the best-performing combination of headlines and descriptions to show to a specific user.

I’ve found this feature very effective because it takes the burden of testing off the advertiser, saving time and making us more efficient. Google itself says RSAs “may improve your campaign’s performance,” most likely because letting its machine learning choose the combination of headlines and descriptions should, in theory, increase ad relevance and performance.

The other great thing about responsive search ads is that Google will show headlines and descriptions that are more relevant to the user’s search query, thus offering a more personalized experience.

7. Include “Jobs To Be Done” and benefit-focused copy across your ads and landing pages ✅

We've found that using copy that focuses on the benefits of our product and on the “Jobs to Be Done” framework performs well in both ads and landing pages.

This type of copy directly addresses customer needs and speaks to how our product can solve their problems.

In my experience, data points in marketing are more than just numbers; they tell a story, revealing insights and lessons that can guide strategic decisions. As marketers, our role involves reading this data, extracting these narratives, and continually testing and refining our strategies.

We’ve been able to attribute some of Buffer's recent growth to this experiment-first approach. The more we test, the more we understand our audience, thus enabling us to continuously refine our marketing strategies for success.

I hope these lessons help you with your own paid marketing and the experiments you build into your marketing strategy.
