When Buffer first introduced video on our platform, it was quite clear that the feature would be a perfect fit for our mobile users.

We know that videos can be an important part of a social media strategy, so making sure this rolled out to our whole audience was important to us.

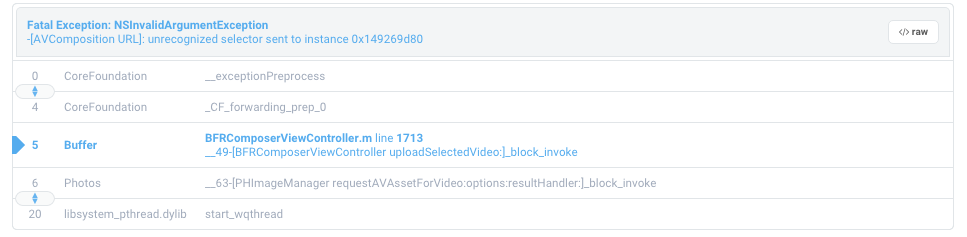

Fortunately, we were able to get things moving quite fast on mobile. Uploading videos to Buffer on iOS worked great, but then one day Fabric let us know that we had likely missed an edge case.

We weren’t immediately sure of the cause. When I began to dig deeper, though, the culprit was clear: slow motion videos on iOS.

Here, I’d love to take you through the process of how I discovered a strategy for dealing with slow motion videos on iOS.

Walking up the call stack

As with any issue that I tackle from a bug report, my first step was to reproduce the problem. This part was a bit challenging, because even though I had the exact line where the exception occurred, I didn’t have any information about the video causing it.

By looking at the call stack, I could see that the heart of the issue was that an AVComposition instance was being sent the URL message, which it doesn’t respond to. In fact, an AVComposition doesn’t have any URL directly associated with it.

The message invoked right before this happened was [PHImageManager requestAVAssetForVideo:options:resultHandler:].

That method passes an instance of AVAsset to its result handler, and for quite some time the way we handled it had worked crash-free in production:

```objc
// Assumes every asset is an AVURLAsset; sending URL to an AVComposition raises an unrecognized-selector exception
NSURL *URL = [(AVURLAsset *)asset URL];
NSData *videoData = [NSData dataWithContentsOfURL:URL];
[self uploadSelectedVideo:video data:videoData];
```

From looking at this, our first issue was clear: we never expected an instance of AVComposition to be returned! It was also obvious from our crash reports that for a long time, this hadn’t been an issue.

Hmmm…

Understanding AVAsset

In my years of iOS development, I haven’t had to venture very deep into AVFoundation. It’s a big world, and it can be a bit intimidating if you are just starting out. Thankfully, AVAsset is fairly easy to grasp.

Essentially, AVAsset represents timed audiovisual media. The reason it didn’t immediately seem familiar to me is that it acts as an abstract class. Since Objective-C has no notion of abstract classes like C# or Java, this can be a “gotcha”: Clang will not show any warnings or compiler errors for code like this:

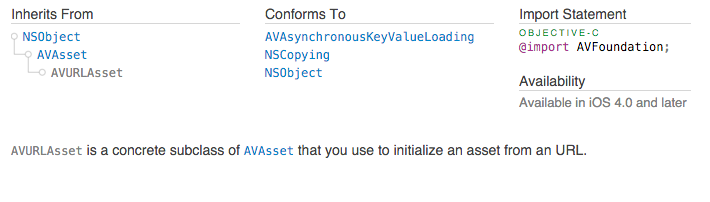

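```objc
AVAsset *anAsset = [AVAsset new]; // compiles cleanly, even though AVAsset is meant to be used through a subclass
```

After a trip to Apple’s documentation, I learned that AVAsset has two concrete subclasses that are used. You can probably guess both of them at this point, but they are:

- AVURLAsset

- AVComposition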

AVURLAsset is fairly straightforward, but I knew I had more to learn about AVComposition. For reference, here is the summary from Apple’s documentation for AVURLAsset:

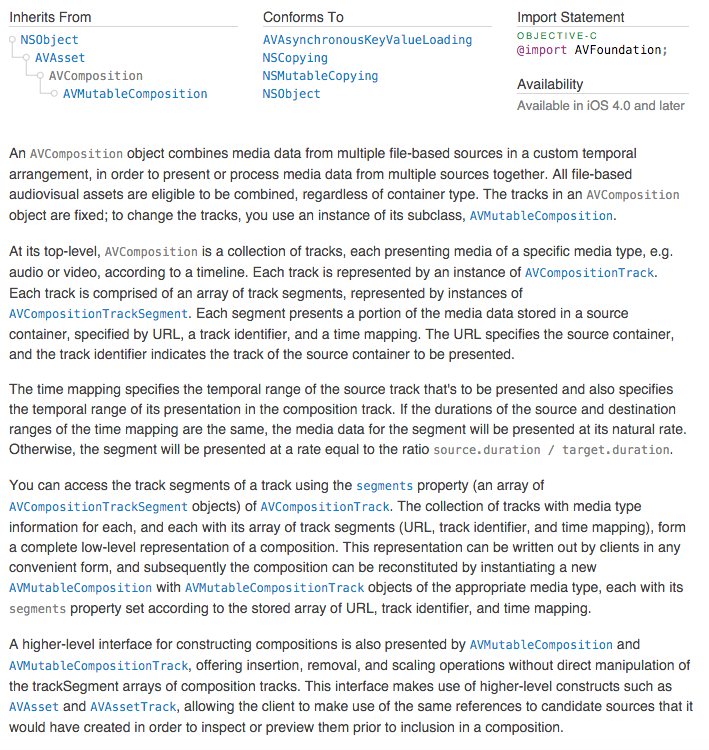

Certainly easy enough, right? And for comparison, here is AVComposition:

Obviously, there is just a tad more going on with AVComposition. Still, I was eager to wrap my head around it!

Reproducing the bug

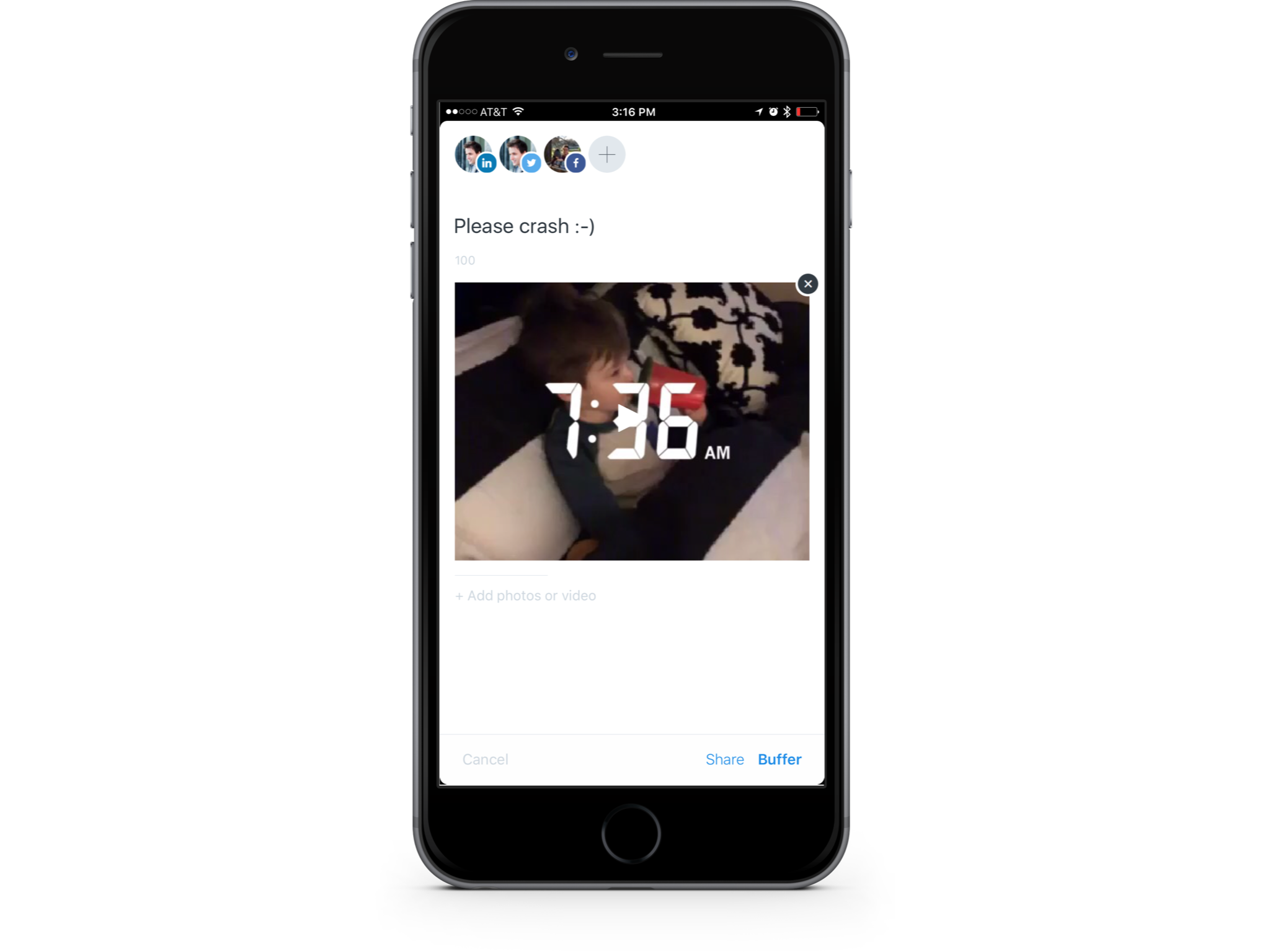

Now that I had a bit more knowledge of the code and classes involved with the crash, I was in a much better position to reproduce it. So, naturally, I started making several test posts that had cute videos of my son attached to them.

Still not having any luck, I reread the class summary for AVComposition. A particular line stuck out:

…combines media data from multiple file-based sources in a custom temporal arrangement, in order to present or process media data from multiple sources together

This was where my “AHA!” moment occurred. AVComposition represents media from multiple sources, and to my knowledge there was really only one kind of video on iOS that combines media like that: slow motion videos.

Sure enough, as soon as I tried to upload a slow motion video to a post, Buffer crashed!

The fix!

At this point, I was in a good position to code up a fix. The questions I had now were:

- How do I retrieve a video from an AVComposition?

- How do I ensure it’s below our 30-second limit?

For starters, I needed to tweak the logic in our completion handler. Now, I knew that we should expect either an instance of AVURLAsset or AVComposition.

This approach may not be bulletproof, but from the several slow motion videos I’ve used, it works like a charm:

```objc
// Heuristic: slow motion videos come back as an AVComposition with two tracks (video + audio)
BOOL isSlowMotionVideo = ([asset isKindOfClass:[AVComposition class]] && ((AVComposition *)asset).tracks.count == 2);
```

The tracks property on AVComposition is an array of AVCompositionTrack instances. These are super helpful, as they contain information about all sorts of things like the track identifier, media type, segments and more.
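To make the branching in the result handler explicit, the check above could be wrapped in a small helper. This is a minimal sketch: the `BUFVideoAssetKind` enum and `BUFClassifyVideoAsset` function are hypothetical names (not part of Buffer’s codebase or Apple’s API), and the two-track test is the same not-bulletproof heuristic described above.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical classification of the AVAsset handed to PHImageManager's result handler.
typedef NS_ENUM(NSInteger, BUFVideoAssetKind) {
    BUFVideoAssetKindFile,        // AVURLAsset: backed by a single file URL
    BUFVideoAssetKindSlowMotion,  // AVComposition with two tracks (video + audio)
    BUFVideoAssetKindUnsupported  // anything else: handle it explicitly instead of crashing
};

static BUFVideoAssetKind BUFClassifyVideoAsset(AVAsset *asset) {
    if ([asset isKindOfClass:[AVURLAsset class]]) {
        return BUFVideoAssetKindFile;
    }
    // Same heuristic as above, based on the slow motion videos observed so far
    if ([asset isKindOfClass:[AVComposition class]] &&
        ((AVComposition *)asset).tracks.count == 2) {
        return BUFVideoAssetKindSlowMotion;
    }
    return BUFVideoAssetKindUnsupported;
}
```

A `BUFVideoAssetKindFile` result can go straight to the original URL-based upload, while `BUFVideoAssetKindSlowMotion` needs the export step. Keeping an explicit “unsupported” case means an unexpected asset type gets reported rather than sent a message it doesn’t understand.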

Getting the video length

Now that I knew when I’d be dealing with slow motion videos, my next task was discovering the video’s length. Core Media provides several struct types for exactly this purpose, such as CMTime, CMTimeRange and CMTimeMapping.

In the tracks array, the last track comes back as the video while the first represents the audio. With the video track (an AVCompositionTrack) in hand, I could calculate the length from the CMTimeMapping of its segments.

Getting the actual length of the video takes a few steps, though:

- Get the CMTimeMapping of the video track’s last segment

- Take that segment’s start time in the composition (target.start) and its duration (source.duration)

- Add the two CMTime structs with CMTimeAdd, then convert the result to seconds with CMTimeGetSeconds

The end result looked like this:

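```objc
AVCompositionTrack *videoTrack = ((AVComposition *)asset).tracks.lastObject; // the video track comes back last
CMTimeMapping lastSegmentMapping = videoTrack.segments.lastObject.timeMapping;
// Final segment's start on the composition timeline + its source duration = total length (assumes that segment isn't time-scaled)
Float64 videoLength = CMTimeGetSeconds(CMTimeAdd(lastSegmentMapping.source.duration, lastSegmentMapping.target.start));
```

Awesome! Now I had a Float64 value representing the video’s length in seconds!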
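A variation on the same calculation reads the end of the last segment’s target range with `CMTimeRangeGetEnd`. Because `target` is where the segment sits on the composition’s timeline, this also holds if the final segment is itself time-scaled (its source and target durations differ), a case that `source.duration + target.start` would not account for. The sketch below then compares the result with the 30-second limit; `BUFTrackLengthInSeconds`, `BUFIsWithinUploadLimit` and the limit constant are hypothetical names for illustration.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical constant for the 30-second upload limit.
static const Float64 BUFMaxVideoSeconds = 30.0;

// Length of a composition track: the end of its last segment on the composition's timeline.
static Float64 BUFTrackLengthInSeconds(AVCompositionTrack *track) {
    AVCompositionTrackSegment *lastSegment = track.segments.lastObject;
    if (lastSegment == nil) {
        return 0.0; // no segments, no media
    }
    // target.start + target.duration, so time scaling in the final segment is included
    return CMTimeGetSeconds(CMTimeRangeGetEnd(lastSegment.timeMapping.target));
}

static BOOL BUFIsWithinUploadLimit(Float64 seconds) {
    return seconds <= BUFMaxVideoSeconds;
}
```

Another option is `CMTimeGetSeconds(asset.duration)` on the whole composition, which covers every track at once; the per-track version stays closer to the approach above.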

Exporting a slow motion video

The last piece of the puzzle was getting hold of an NSURL for the video. To upload videos in our codebase, we need an NSData representation of them. Once we have a URL, we can get that data using [NSData dataWithContentsOfURL:].

To achieve this, I basically went through a three-step process:

- Create an output URL for the video

- Configure an export session

- Export the video and grab the URL!

After it was all said and done, the implementation of this step looked like this:

```objc
// Output URL: a randomly named .mov file in the Documents directory
NSArray *paths = NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES);
NSString *documentsDirectory = paths.firstObject;
NSString *myPathDocs = [documentsDirectory stringByAppendingPathComponent:[NSString stringWithFormat:@"mergeSlowMoVideo-%u.mov", arc4random_uniform(1000)]];
// AVAssetExportSession fails rather than overwrite an existing file, so clear the path first
[[NSFileManager defaultManager] removeItemAtPath:myPathDocs error:nil];
NSURL *url = [NSURL fileURLWithPath:myPathDocs];

// Begin slow mo video export: render the composition into a single movie file
AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:asset presetName:AVAssetExportPresetHighestQuality];
exporter.outputURL = url;
exporter.outputFileType = AVFileTypeQuickTimeMovie;
exporter.shouldOptimizeForNetworkUse = YES;

[exporter exportAsynchronouslyWithCompletionHandler:^{
    // The completion handler may run on a background queue, so hop back to main before uploading
    dispatch_async(dispatch_get_main_queue(), ^{
        if (exporter.status == AVAssetExportSessionStatusCompleted) {
            NSURL *URL = exporter.outputURL;
            NSData *videoData = [NSData dataWithContentsOfURL:URL];
            // Upload
            [self uploadSelectedVideo:video data:videoData];
        } else {
            NSLog(@"Slow motion export failed: %@", exporter.error);
        }
    });
}];
```

Going back a little, you don’t need any of this if the result handler gives you an AVURLAsset. In that case, the code we had been using all along, shown earlier, is all that’s required.
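One weak spot in the output URL step is the random suffix: it only allows 1,000 distinct file names, and AVAssetExportSession fails instead of overwriting a file that already exists at its output URL. A minimal alternative, assuming a temporary file is acceptable since the data is read into memory before uploading, names the file with a UUID inside `NSTemporaryDirectory()`. `BUFMakeExportURL` is a hypothetical helper name.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: a fresh output URL for each export.
// A UUID makes every file name unique, so the export never collides with an
// earlier file, and the temporary directory is a natural home for short-lived uploads.
static NSURL *BUFMakeExportURL(void) {
    NSString *fileName = [NSString stringWithFormat:@"mergeSlowMoVideo-%@.mov", [NSUUID UUID].UUIDString];
    NSString *path = [NSTemporaryDirectory() stringByAppendingPathComponent:fileName];
    return [NSURL fileURLWithPath:path];
}
```

It could stand in for the `url` built in the output URL step (`exporter.outputURL = BUFMakeExportURL();`). Deleting the file with `-[NSFileManager removeItemAtURL:error:]` once its data has been read keeps exports from piling up on the device.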

Wrapping up

This was certainly one of the more rewarding bugs I have come across and fixed so far at Buffer. There didn’t seem to be much discussion of it in the community, so diving in and relying solely on Apple’s docs was a good reminder that they are always a great place to start!

If you’ve run into a snag with slow motion videos on iOS, I certainly hope this helps you out! It was my first pass at it, so I’d love to know if you have any suggestions on how to improve it.

Are there some obvious ways to optimize it? I’d love to learn from you!
