History and Background

Ever since Buffer started its journey with Kubernetes (k8s) almost a year ago, we have been on a mission to streamline the deployment experience. Microservices differ significantly from a monolith application in many ways. For each deploy, a monolith application requires merging the changes and deploying them to a well-defined environment that has been tested and proven production-ready. More often than not, a dedicated team acts as the guardian of the production environments. People already understand this flow well, as it has been the norm for many years.

On the other hand, microservice deployments may seem more complicated. Each deployment involves building a new Docker image, tagging it and pushing it to a Docker registry, then composing Kubernetes-specific yaml files that describe network and resource configurations as well as credentials. The paradigm shift brought a lot more flexibility to our deployment strategy, and as early adopters we were quite happy to do everything manually without much automation.
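To make those steps a little more concrete, here is a minimal Python sketch of the "compose the yaml" part: it builds a bare-bones Deployment manifest, as a dict, for a freshly pushed image tag. The service name, port, replica count and the `apps/v1` API version are illustrative assumptions rather than Buffer’s actual configuration, and a real manifest would also carry resource limits, a Service and credentials.

```python
import json


def image_reference(repo: str, tag: str) -> str:
    """Join a registry repository and a tag, e.g. bufferapp/buffer-publish:a1b2c3d."""
    return f"{repo}:{tag}"


def deployment_manifest(name: str, namespace: str, image: str,
                        port: int, replicas: int = 2) -> dict:
    """Return a minimal Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,  # the tag that was just pushed
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest(
        "buffer-publish", "buffer",
        image_reference("bufferapp/buffer-publish", "a1b2c3d"), port=8080)
    # kubectl accepts JSON manifests too: python this_script.py | kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```

Every deploy repeats this with a new tag, which is exactly the repetitive work the rest of this post tries to automate.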

After a few months we had a few engineers who were well-versed in manually deploying services to k8s. This was great! As a logical next step, we started thinking about how to bring the consistent deployment experience of our monolith over to k8s, and push for team-wide adoption.

Challenges

Okay! Enough background and boring technology comparison. Let’s start with some real-world challenges that I believe apply to every company going through this kind of transition.

Challenge 1 – The need for k8s yaml files for each microservice

The division of labor in many tech companies draws a line between Product Engineering and DevOps. As a result, asking product engineers to create yaml files can lead to more friction, as these files declare runtime configurations like computing resource requirements, service discovery and network ports. We are no exception. While many courageous product engineers jumped in and learned everything needed to deploy their services, we still felt the process could be further simplified to achieve greater team-wide adoption.

Challenge 2 – The entirely different technology stack for deployment

Our monolith application deployment is based on git commits. When we start a production deploy, a Jenkins job is triggered that checks out the code from GitHub and deploys the HEAD of master. We have been enjoying the convenience AWS brings to deployment: the Jenkins job is simple because it only needs to check out the codebase and use the AWS CLI to deploy code changes to Elastic Beanstalk. In k8s, however, deployments are based on Docker image tags and yaml files, and AWS provides no out-of-the-box automation for them. Since we are dealing with two very different technologies, we can’t simply port the existing deployment automation to k8s. We need something new, or more encompassing.

Challenge 3 – Handling deployment rollback

Rolling back is easy for our monolith application on AWS Elastic Beanstalk. You simply go to the AWS console, select a git commit version to roll back to and bam! It’s done. It’s not the same story for k8s. Since k8s is our self-hosted infrastructure, we had no choice but to implement our own console that does what AWS does for us. Because these challenges are real and directly affect the rate of adoption, we started working on automation tools that we believed would be a good investment in the move to k8s.

Kuberdash

Getting the entire engineering team to learn how Kubernetes works and how to use its kubectl CLI is a big ask. We realized this represents a completely different experience and that a steep learning curve is inevitable. To address this, our Systems Engineer Adnan created a new webapp called Kuberdash, which aims to provide a deployment experience similar to the one we have for the monolith. Kuberdash has a few key features:

- Manage services that can be deployed using our Slackbot command

- Control the deployment process, from building the Docker image to patching the yaml file on the k8s cluster to use the new image. This lets us perform new deployments as well as rollbacks.
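Patching the cluster to use a new image can be illustrated with a strategic merge patch, which is the patch type `kubectl patch` applies by default. The sketch below is hypothetical and is not Kuberdash’s code: it builds the patch body for a single container plus the matching `kubectl` argument list. The deployment, container and image names are placeholders.

```python
import json


def image_patch(container: str, image: str) -> dict:
    """Strategic merge patch that changes one container's image.

    Containers are merged by their `name`, so only the named container
    changes; the rest of the Deployment spec is left as it is.
    """
    return {"spec": {"template": {"spec": {"containers": [
        {"name": container, "image": image},
    ]}}}}


def kubectl_patch_args(deployment: str, namespace: str, patch: dict) -> list:
    """Argument list for `kubectl patch`; strategic merge is its default patch type."""
    return ["kubectl", "--namespace", namespace, "patch", "deployment",
            deployment, "-p", json.dumps(patch)]


if __name__ == "__main__":
    patch = image_patch("buffer-publish", "bufferapp/buffer-publish:b2c3d4e")
    # Pass this list to subprocess.run(...) to actually apply the patch.
    print(kubectl_patch_args("buffer-publish", "buffer", patch))
```

Changing the pod template triggers a rolling update of the Deployment, and a rollback is simply the same patch with an older image tag.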

Alright, we’ll be honest: this is an MVP.

Creating a Slack Command

We have been using a Slack command to make monolith deployments, and that’s what we are most familiar with (for now). As the above screenshot shows, the UI helps create a Slack command that allows service deployments from Slack, similar to what we have for monolith deployments.
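Slack delivers a slash command to your server as a form-encoded POST, with whatever was typed after the command in the `text` field. As a rough sketch, assuming a hypothetical `/deploy <service> <image-tag>` syntax and a set of services registered in the dashboard, the parsing step could look like this. Verifying that the request really came from Slack is deliberately left out and would be required in practice.

```python
from urllib.parse import parse_qs


def parse_deploy_command(body: str, known_services: set) -> dict:
    """Turn a slash-command POST body into a deploy request.

    Assumes the hypothetical syntax `/deploy <service> <image-tag>`.
    """
    # parse_qs decodes the form body; each field arrives as a one-item list.
    fields = {key: values[0] for key, values in parse_qs(body).items()}
    args = fields.get("text", "").split()
    if len(args) != 2:
        raise ValueError("usage: /deploy <service> <image-tag>")
    service, tag = args
    # Only services registered in the dashboard may be deployed.
    if service not in known_services:
        raise ValueError(f"unknown service: {service}")
    return {
        "service": service,
        "tag": tag,
        "user_id": fields.get("user_id"),
        # Slack accepts delayed replies (e.g. "deploy finished") at this URL.
        "response_url": fields.get("response_url"),
    }
```

Keeping the registered-service check on the server side means only services that have been set up in the UI can be deployed from Slack.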

Rollback

We are not saints, and we are bound to make mistakes. A good production deployment service should always support rollback, usually just to the previous version. Kuberdash goes a bit further by making all historical deployments available. This rollback feature puts our minds at ease, as we know we can easily go back to a stable version if something goes wrong.
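One simple way to keep every historical deployment available is an append-only release log, where a rollback is itself recorded as a new release pointing at an older image. The following is a minimal in-memory sketch of that idea with hypothetical class names; how Kuberdash actually stores its history is not described in this post.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Release:
    number: int
    image: str
    deployed_at: datetime


@dataclass
class DeploymentHistory:
    service: str
    releases: List[Release] = field(default_factory=list)

    def record(self, image: str) -> Release:
        """Append a new release; numbers start at 1 and only ever grow."""
        release = Release(len(self.releases) + 1, image, datetime.now(timezone.utc))
        self.releases.append(release)
        return release

    def current(self) -> Release:
        if not self.releases:
            raise LookupError(f"{self.service} has never been deployed")
        return self.releases[-1]

    def rollback(self, number: Optional[int] = None) -> Release:
        """Redeploy an earlier release (default: the one before the current one).

        The rollback is appended as a new release, so history is never rewritten.
        """
        if number is None:
            if len(self.releases) < 2:
                raise LookupError("no previous release to roll back to")
            target = self.releases[-2]
        else:
            matches = [r for r in self.releases if r.number == number]
            if not matches:
                raise LookupError(f"release {number} not found")
            target = matches[0]
        return self.record(target.image)
```

Recording rollbacks as new entries keeps an honest audit trail of what was running when, which is handy when investigating an incident afterwards.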

Recap

Key takeaways

- Kuberdash eliminates the need for product engineers to create Slack commands for their services by themselves. It’s plug and play once you have the Docker image and yaml files ready. – Challenge 2

- Kuberdash makes rollback much easier. – Challenge 3

Kuberdash has been a success! The effort to recreate the deployment experience of the monolith application paid off, and our engineers embraced it fully! All credit goes to Adnan for building it and iterating on it!

CI/CD with Helm and Jenkins

Then we wanted something more. We wanted to see if we could further automate deployment to testing environments, ideally one per branch. We then came across this demo (thanks to Dan for passing it along), which sparked an internal discussion, and we decided to give it a try. A few key components are needed for it to work.

Please note this post is intended to be conceptual rather than a step-by-step, watertight guide. To make the most out of it, you may want some background knowledge of Helm, Helm Charts and Jenkins Pipeline.

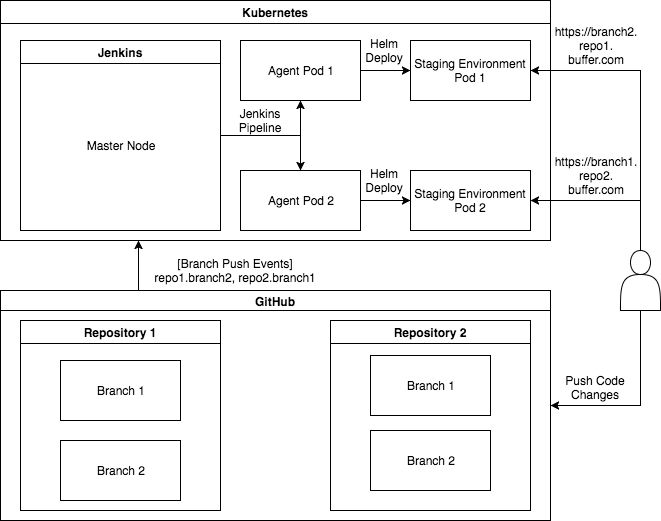

This is a high-level illustration.

Kubernetes Cluster

This is a must, not only as the deployment destination but also for powering the CI/CD Pipeline in Jenkins. We like this approach because it embodies the idea of containerizing everything. It also saves the cost of hosting a dedicated EC2 instance.

Jenkins on Kubernetes Cluster

We found the easiest way to get Jenkins onto k8s was using Helm. Fortunately, a stable Jenkins Chart is available for Helm deployment, so we could deploy it with this one-liner (Helm 2 syntax):

```shell
helm install --name cicd --namespace jenkins -f ./jenkins-values.yaml stable/jenkins
```

We used these override values (jenkins-values.yaml) for the Helm Chart deployment:

```yaml
Master:
  Memory: "512Mi"
  HostName: jenkins-k8s-us-east1.buffer.com
  ServiceType: ClusterIP
  InstallPlugins:
    - kubernetes:0.12
    - workflow-aggregator:2.5
    - credentials-binding:1.13
    - git:3.5.1
    - pipeline-github-lib:1.0
    - ghprb:1.39.0
    - blueocean:1.1.7
    - greenballs:1.15
    - slack:2.2
    - saml:1.0.3
    - generic-webhook-trigger:1.19
  ScriptApproval:
    - "method groovy.json.JsonSlurperClassic parseText java.lang.String"
    - "new groovy.json.JsonSlurperClassic"
    - "staticMethod org.codehaus.groovy.runtime.DefaultGroovyMethods leftShift java.util.Map java.util.Map"
    - "staticMethod org.codehaus.groovy.runtime.DefaultGroovyMethods split java.lang.String"
  Ingress:
    Annotations:
      ..class: nginx
Agent:
  Enabled: false
rbac:
  install: true
  # RBAC api version (currently either v1beta1 or v1alpha1)
  apiVersion: v1beta1
  # Cluster role reference
  roleRef: cluster-admin
```

If you are on k8s 1.6 or higher with RBAC enabled, you will need to bind a role to the default service account in the jenkins namespace. Something like this worked for us:

```yaml
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22;
# use rbac.authorization.k8s.io/v1 on newer clusters.
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: jenkins-cluster-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: default
    namespace: jenkins
```

Hooray! You should now have a Jenkins server running on your k8s cluster. Let’s see how we can make it do our bidding!

GitHub Repo with a Jenkinsfile

This is the meat of this workflow. Pretty much all the deployment logic goes in here, and that’s the beauty of it! We can now program our CI/CD Pipeline flexibly, and all the logic is stored in GitHub repos instead of in the Jenkins installation. This saves the time spent setting up fresh Jenkins jobs, which usually involves filling in a lot of forms. If you have done that before, you will probably agree it’s tedious and error-prone. By contrast, if you have a working Jenkinsfile, you can bring it to any Jenkins installation and, in a snap of the fingers, see some lovely green like this. Isn’t it nice?

You will need a Jenkinsfile like this in your repo:

```groovy
#!/usr/bin/groovy

@Library('github.com/bufferapp/k8s-jenkins-pipeline@master')

def pipeline = new com.buffer.Pipeline3()
pipeline.start('Jenkinsfile.json')
```

Simple, huh? Well, that’s because all the magic is hidden in a public repo, github.com/bufferapp/k8s-jenkins-pipeline. This means we can encapsulate the CI/CD logic as a library shared amongst services, which eases adoption because the Jenkinsfile itself is generic. Engineers just need to copy these 6 lines into a Jenkinsfile in their service repo and voilà, it just works.

Note that on line 6 we pass a configuration file (Jenkinsfile.json) to the library, which I will explain in a later section.

Let’s take a look at what Pipeline3 does. Please follow the links to the code sections of interest. A full version can be found here.

- Setting up a running agent node on Jenkins

- Getting the image tag based on the GitHub branch and commit

- Pre-build script for uploading static assets

- Building and pushing the built image to Docker Registry

- Deploying the pushed image to k8s using Helm
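As an illustration of the tagging and Helm steps above, the sketch below derives an image tag from the branch and commit, then builds a `helm upgrade --install` command with one release per branch. The tag format, the release naming, the `./charts/app` chart path and the `image.tag` value key are all assumptions for this example; the actual logic lives in the Pipeline3 library.

```python
import re


def branch_slug(branch: str) -> str:
    """Lowercase the branch and collapse anything outside [a-z0-9] into '-'."""
    return re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")


def image_tag(branch: str, commit_sha: str) -> str:
    """Hypothetical tag format: <branch-slug>-<short commit>."""
    return f"{branch_slug(branch)}-{commit_sha[:7]}"


def helm_deploy_args(app: str, namespace: str, branch: str, commit_sha: str,
                     chart_dir: str = "./charts/app") -> list:
    """One Helm release per branch; master keeps the bare app name."""
    release = app if branch == "master" else f"{app}-{branch_slug(branch)}"
    return [
        # `upgrade --install` creates the release on the first push
        # and upgrades it in place on every later push.
        "helm", "upgrade", "--install", release, chart_dir,
        "--namespace", namespace,
        # `image.tag` is an assumed key; it must match the chart's values.yaml.
        "--set", f"image.tag={image_tag(branch, commit_sha)}",
    ]


if __name__ == "__main__":
    print(helm_deploy_args("buffer-publish", "buffer", "feature/login", "abcdef1234567"))
```

Using `upgrade --install` keeps the pipeline idempotent: the same command works whether or not the branch has been deployed before.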

I’d like to give credit to Lachlan Evenson, as I took most of the logic from his work and tweaked it for our uses. I’m forever grateful for the open and sharing Kubernetes community.

Configuring CI/CD Pipeline

While all the logic seems generic, you must be wondering where the configuration lives. The CI/CD Pipeline takes a config file from the service repo, as you can see here. The configuration file can be as simple as:

```json
{
  "app": {
    "name": "buffer-publish",
    "namespace": "buffer",
    "deployment_url": "publish.buffer.com",
    "test": true
  },
  "container_repo": {
    "repo": "bufferapp/buffer-publish",
    "jenkins_creds_id": "dockerhub-credentials"
  },
  "pipeline": {
    "enabled": true,
    "debug": false
  }
}
```

Jenkinsfile.json specifies:

- Deployment related information, used by Helm

- Docker Registry related information, used for docker build and docker push

- Pipeline execution configurations

It’s worth noting that jenkins_creds_id is the ID of the Docker Hub (or other Docker registry of your choice) credentials stored in Jenkins.
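A pipeline consuming this file would typically fail fast when something it needs is missing. Here is a small Python sketch of that check; which keys count as required, and the defaults used for the `pipeline` section, are assumptions for illustration rather than what Pipeline3 actually enforces.

```python
import json

# Which keys are mandatory is an assumption made for this sketch.
REQUIRED_KEYS = {
    "app": ("name", "namespace"),
    "container_repo": ("repo", "jenkins_creds_id"),
}
PIPELINE_DEFAULTS = {"enabled": True, "debug": False}


def load_pipeline_config(text: str) -> dict:
    """Parse Jenkinsfile.json, fail on missing keys, and fill pipeline defaults."""
    config = json.loads(text)
    missing = [
        f"{section}.{key}"
        for section, keys in REQUIRED_KEYS.items()
        for key in keys
        if key not in config.get(section, {})
    ]
    if missing:
        raise ValueError("Jenkinsfile.json is missing: " + ", ".join(missing))
    # Values present in the file win over the defaults.
    config["pipeline"] = {**PIPELINE_DEFAULTS, **config.get("pipeline", {})}
    return config
```

Failing at this stage produces a clear error message instead of a confusing failure halfway through a docker push or a Helm deploy.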

Helm Chart

Alrighty, you now have a running Jenkins server and a correctly configured CI/CD Pipeline. What now?

Now is a good time to define the Helm Chart for your repository. Here is a great example by Lachlan Evenson. The CI/CD Pipeline expects to find the Helm Chart template directory inside the repo. Since the yaml files are templated, this also helps with adoption: engineers are generally happy to copy the entire template over and swap out only the values related to their services.
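Swapping out only the service-specific values works because Helm merges an override values file into the chart’s defaults: nested maps are merged key by key, while scalars and lists are replaced wholesale. The sketch below imitates that merge in Python purely for illustration (Helm’s own implementation also handles cases such as setting a key to `null` to remove it, which this skips); the value names in the test data are hypothetical.

```python
import copy


def merge_values(defaults: dict, overrides: dict) -> dict:
    """Merge override values into chart defaults without mutating either input.

    Nested dicts are merged recursively; any other override value
    (scalars and lists alike) replaces the default outright.
    """
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged
```

This is why a service repo only needs to override a handful of keys, such as its image repository, while inheriting everything else from the shared template.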

Profit!

Once the GitHub hook is set up, pushes to branches or master are automatically deployed to staging environments. The URL is basically the branch name with / replaced by -. Again, this logic can be changed flexibly in the CI/CD Pipeline.
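The hostname rule can be sketched in a few lines of Python. Beyond swapping `/` for `-`, this version also lowercases the name and trims it to a valid DNS label (at most 63 characters of a-z, 0-9 and `-`); that extra hardening and the `staging.example.com` base domain are assumptions for the example.

```python
import re


def staging_host(branch: str, base_domain: str) -> str:
    """Build a per-branch staging hostname from a git branch name."""
    # The rule described above: swap "/" for "-".
    label = branch.replace("/", "-").lower()
    # Extra hardening (an assumption): DNS labels only allow a-z, 0-9 and "-" ...
    label = re.sub(r"[^a-z0-9-]+", "-", label).strip("-")
    # ... and are at most 63 characters long.
    label = label[:63].rstrip("-")
    if not label:
        raise ValueError(f"cannot derive a hostname from branch {branch!r}")
    return f"{label}.{base_domain}"


if __name__ == "__main__":
    print(staging_host("feature/new-composer", "staging.example.com"))
```

Without the sanitizing step, a branch containing underscores, dots or uppercase letters could produce an invalid hostname, which would then break the Ingress for that branch.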

Deployed!

Engineers are now happily pushing their code changes, with the peace of mind of knowing things work before they reach production. This is especially empowering for engineers who are just starting out with k8s. If you like, you could even include the link to the staging environment in your PR to make the reviewer’s life easier.

Recap

Key takeaways:

- Templating yaml files with Helm Charts reduces the friction of having engineers learn the dark magic of k8s – Challenge 1

- The use of Jenkins Pipeline and Helm allows for a programmable build strategy, making the technological switch to deploying on k8s easier – Challenge 2

- Having the ability to do CI/CD on k8s is just way too cool. Product engineers can now focus on the application logic and forget about code deployment. They simply go to the deployed URL and see their hard work there. No upfront or hidden cost, just sheer excitement! – Challenge 2

Over to You!

At the time of this writing, we are still discussing whether we should start using Helm CI/CD with Jenkins for production deploys or continue using Kuberdash, since it supports rollback out of the box. Although we haven’t reached a conclusion yet, we agreed to incorporate some of the cool technologies like Helm, templated yaml files, and k8s-powered Jenkins into Kuberdash. It’s still too early to say whether one will replace the other, so we are happy to let both evolve over time. Deploying to k8s remains a largely uncharted area, and companies are working hard to find an approach that works for them. I personally believe that only through more discussion will we as a community discover better solutions together. If you have any advice, thoughts or questions, we are all ears! Let’s get the discussion going!

Thanks for reading this far! I’m happy to answer any questions you might have by email: steven@buffer.com or Twitter: @stevenc81.
